New ACI lab

Earlier this evening I sent out a tweet with a photo depicting the new ACI lab we received at Axians (my employer). I thought I’d share some more details about this lab with everybody who’s interested.

Shiny new equipment. 😎 Look at our awesome new #ACI lab. Going to be a multi-site setup.

This is going to be super helpful in servicing our customers. @AxiansNL #Cisco pic.twitter.com/h2hx2RWY3T

— Michael van Kleij (@mvankleij_nl) January 23, 2020

Goal

One aspect of my job is to design, build and implement ACI networks for our customers. Our lab needs to support the most commonly used ACI features, and that sets the requirements for the new lab, which will replace our current one. The current lab runs Gen1 hardware, which will soon be unsupported by Cisco and by the newest ACI releases. This was the primary reason for getting a new lab.

However, lifecycle management alone wasn't the whole story. The old lab started out as a single-pod environment. About three years ago we turned it into a multi-pod environment, because customers were asking for multi-pod deployments and we needed a lab that could support us and our customers with this technology.

ACI development is still moving at a rapid pace, and each major release adds new capabilities. These capabilities increasingly require Gen2 hardware, while our lab was still running Gen1, so we could not always rely on it to test them.

The new lab needed to support these new features. Two of the most important ACI features that require Gen2 hardware are Multi-Site and ACI Anywhere. Extending ACI into the public cloud is one of the features driving adoption of ACI multi-site deployments; before this feature, many customers did not need more than multi-pod.

Another important goal of this lab is to help us support customers when they run into issues. We will never be able to replicate a customer's complete production environment in our lab, as that would require many switches of many different types, but being able to implement every feature the customer is using is important.

Design

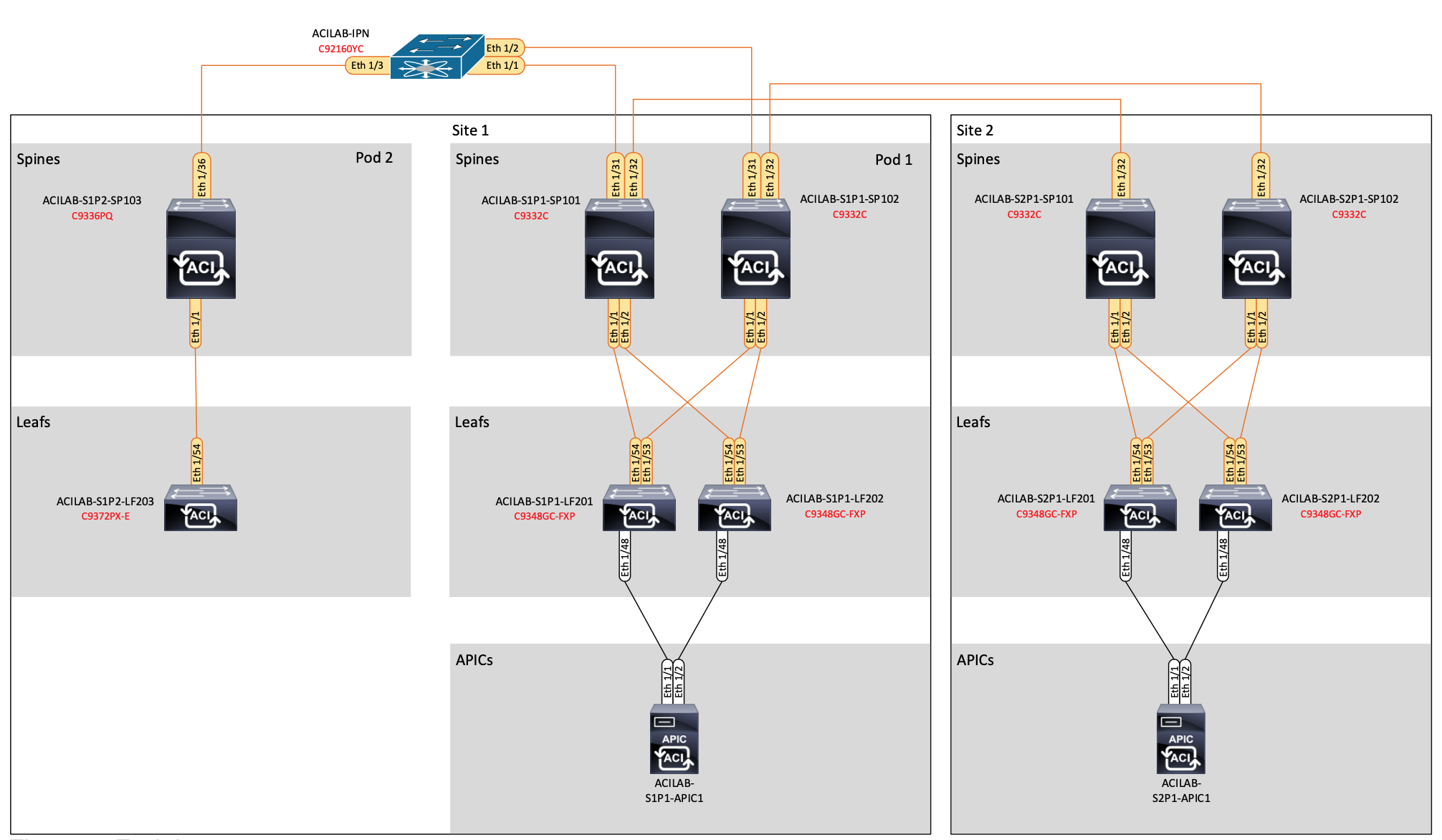

The design of the lab is fairly straightforward: we will combine a multi-site environment with a multi-pod environment. This lets us test many of the different scenarios our customers might be facing.

The diagram shows the physical topology of the first stage of the design. The multi-pod part will initially be built with older switches, so we can keep supporting Gen1 hardware for as long as our customers are still running it. Once Gen1 has been phased out completely, we will replace those switches with Gen2 hardware.

The topology will consist of the following hardware:

- 4x N9K-C9332C Spine switches (2 per Site)

- 4x N9K-C9348GC-FXP Leaf switches (2 per Site)

- 1x N9K-C9336PQ Spine switch (only Pod 2 of Site 1)

- 1x N9K-C9372PX-E Leaf switch (only Pod 2 of Site 1)
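To make the layout of the list easier to reason about, here is a minimal sketch that models the first-stage fabric nodes as data and counts them per site and pod. Two things are my assumptions rather than statements from the list: Site 2 is treated as a single pod (Pod 1), and the Gen1/Gen2 labels are inferred from the remark that the multi-pod part uses older switches. The IPN switch is left out because it is not an ACI fabric node.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Switch:
    model: str
    role: str  # "spine" or "leaf"
    site: int
    pod: int
    gen: int  # ASSUMPTION: 1 = Gen1, 2 = Gen2 (cloud-scale) hardware


# First-stage fabric nodes. ASSUMPTION: Site 2 is a single pod (pod 1).
INVENTORY = [
    *[Switch("N9K-C9332C", "spine", site, 1, 2) for site in (1, 2) for _ in range(2)],
    *[Switch("N9K-C9348GC-FXP", "leaf", site, 1, 2) for site in (1, 2) for _ in range(2)],
    Switch("N9K-C9336PQ", "spine", 1, 2, 1),
    Switch("N9K-C9372PX-E", "leaf", 1, 2, 1),
]


def nodes_per_pod(inventory):
    """Count fabric nodes per (site, pod, role)."""
    return Counter((s.site, s.pod, s.role) for s in inventory)


def gen1_replacement_list(inventory):
    """Models to swap for Gen2 hardware once Gen1 is phased out."""
    return [s.model for s in inventory if s.gen == 1]


if __name__ == "__main__":
    for (site, pod, role), count in sorted(nodes_per_pod(INVENTORY).items()):
        print(f"Site {site} / Pod {pod}: {count}x {role}")
    print("Gen1 to replace:", gen1_replacement_list(INVENTORY))
```

Keeping the generation on each node makes the planned second stage a simple filter: everything returned by `gen1_replacement_list` is what gets swapped out.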

The C9332C spine switches will be connected back-to-back for the multi-site connectivity. The multi-pod IPN will be built with a single N9K-C92160YC switch, which will also double as the router on which L3Out connections are terminated.

Within this fabric, each ACI team member will have their own tenant. Most of these tenants will be managed using the Multi-Site Orchestrator (MSO). If we need to test something for a customer, we will create a new tenant for that customer that replicates their configuration as far as possible. These tenants will most likely not be managed by MSO, as many customers are not yet using it.
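The split between MSO-managed and APIC-managed tenants can be sketched as a small plan. This is a minimal illustration, not how we actually provision tenants: the member and customer names are hypothetical, and only the customer-replica tenants get an APIC payload, since MSO-managed tenants would be created from MSO and pushed to the sites. The `fvTenant` body is the standard APIC REST object for a tenant and would be POSTed to `/api/mo/uni.json` after logging in.

```python
# Hypothetical names: the post does not list the actual tenants.
TEAM_MEMBERS = ["alice", "bob"]
CUSTOMER_REPLICAS = ["customer-a"]


def tenant_plan(members, customers):
    """Map each tenant to the system that manages it."""
    plan = {f"lab-{m}": "MSO" for m in members}  # team tenants, pushed via MSO
    plan.update({f"cust-{c}": "APIC" for c in customers})  # replicas, APIC only
    return plan


def apic_tenant_payload(name, descr=""):
    """JSON body that creates an fvTenant when POSTed to /api/mo/uni.json."""
    return {"fvTenant": {"attributes": {"name": name, "descr": descr}}}


def apic_payloads(plan):
    """Payloads for the tenants that are configured directly on the APIC."""
    return [
        apic_tenant_payload(name, "Customer replica")
        for name, owner in plan.items()
        if owner == "APIC"
    ]
```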

Since it is a lab, we need to be able to reconfigure it as needed to reproduce issues or test designs. That is fine, but we also need to make sure the lab is restored to its original design as soon as possible afterwards. For now we will use fabric backups for this, but we will likely automate it at some point in the future.
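To give an idea of what automating the restore could look like, here is a minimal sketch that builds the JSON body of a configuration import (restore) job for the APIC REST API. The class and attribute names (`configImportP`, `configRsImportSource`, `importType`, `importMode`, `adminSt`) reflect my understanding of the APIC object model and should be checked against the documentation for your release; the backup file name and remote location name are hypothetical. The body would be posted to `/api/mo/uni/fabric.json`.

```python
def restore_job_payload(backup_file, remote_location, job_name="restore-lab-baseline"):
    """Build an APIC config import job that restores a fabric backup.

    ASSUMPTION: class and attribute names follow the APIC object model;
    verify them for your APIC release before use.
    """
    return {
        "configImportP": {
            "attributes": {
                "name": job_name,
                "fileName": backup_file,
                "importType": "replace",  # replace the current config instead of merging
                "importMode": "atomic",
                "adminSt": "triggered",  # start the import as soon as it is posted
            },
            "children": [
                {
                    "configRsImportSource": {
                        "attributes": {"tnFileRemotePathName": remote_location}
                    }
                }
            ],
        }
    }
```

Choosing `replace` over `merge` is what should bring a reconfigured lab back to its baseline: objects created during a test are not simply left in place alongside the restored configuration.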

Some UCS servers will be attached to the fabric for VMM integration and client simulation. We will also run a selection of virtual firewalls and load balancers to test L4-L7 service integration and policy-based redirect (PBR).
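For the PBR tests, the redirect policy is the piece that points traffic at the virtual appliances. Below is a minimal sketch of that policy as an APIC REST payload, assuming the `vnsSvcRedirectPol` and `vnsRedirectDest` classes, posted under a tenant at `/api/mo/uni/tn-<tenant>/svcCont.json`. The policy name, IP and MAC addresses are hypothetical. A complete PBR setup also needs an L4-L7 device, a service graph template and a device selection policy, which are not shown here.

```python
def pbr_policy_payload(name, destinations):
    """Build a service redirect (PBR) policy for the APIC REST API.

    destinations: (ip, mac) tuples of the appliance interfaces that
    redirected traffic should be sent to.
    """
    if not destinations:
        raise ValueError("a redirect policy needs at least one destination")
    return {
        "vnsSvcRedirectPol": {
            "attributes": {"name": name},
            "children": [
                {"vnsRedirectDest": {"attributes": {"ip": ip, "mac": mac}}}
                for ip, mac in destinations
            ],
        }
    }


# Example: redirect to one (hypothetical) virtual firewall interface.
policy = pbr_policy_payload("fw-redirect", [("192.0.2.10", "00:50:56:AA:BB:01")])
```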