ACI Tenant Policy Model (or ACI Logical Constructs)

Cisco ACI is a policy-based fabric. This means that the complete environment is modelled as objects. The ACI fundamentals guide explains this model step by step.

This post covers the tenant policy model. The tenant policy model is the part of the overall model located directly under its root, which shows that it is one of the most important parts of ACI. When operating an ACI fabric on a day-to-day basis, the tenant policy model is the part of the fabric you will touch the most.

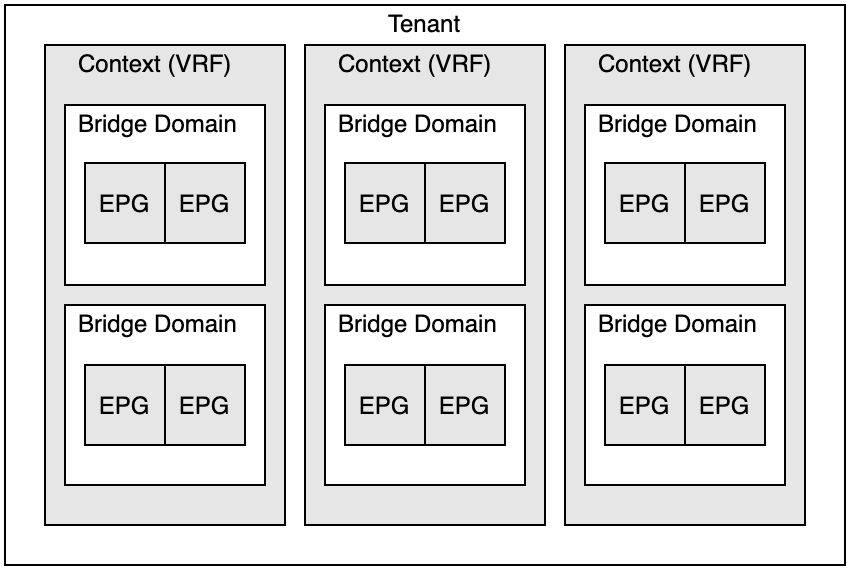

When I’m at a customer that is starting with ACI and I have to explain the terminology of ACI, I like to use the image below.

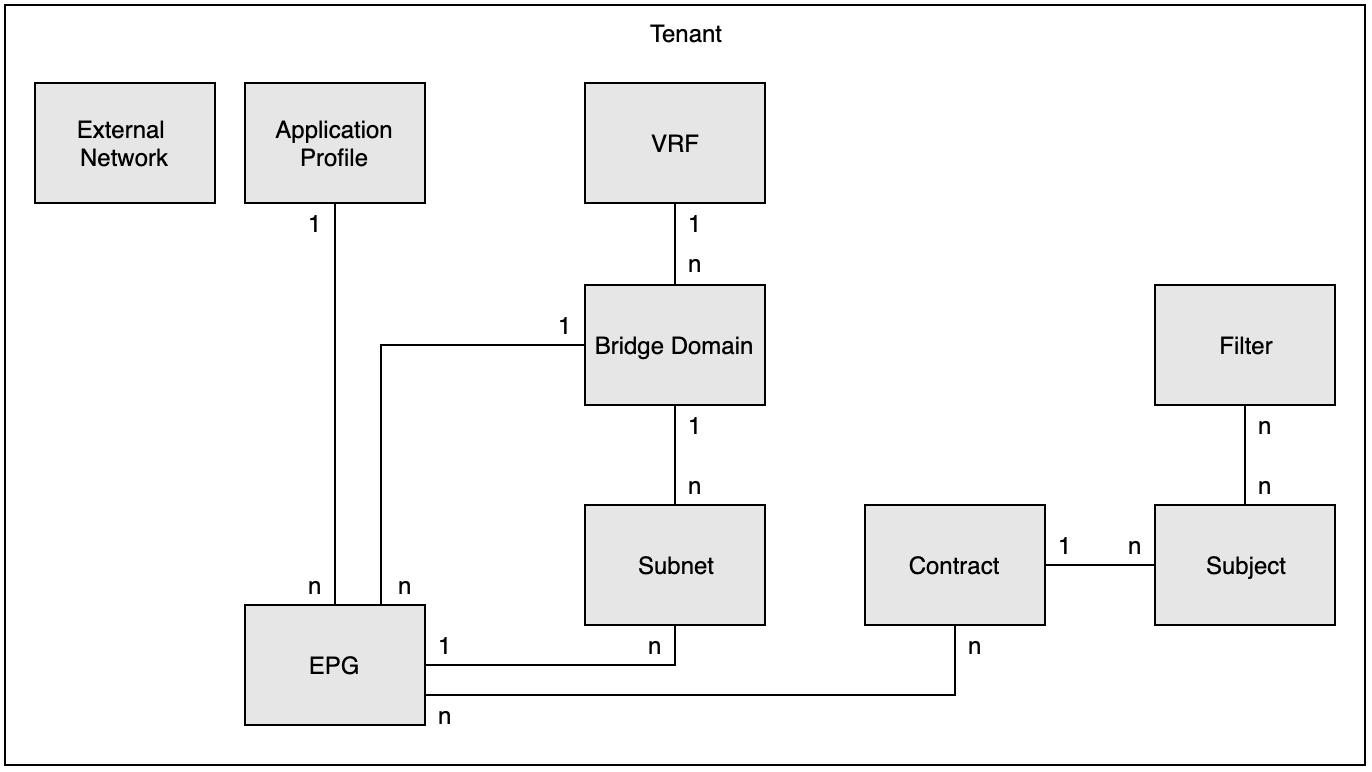

Unfortunately, although the image above helps me explain the tenant policy model to my customers, it is incomplete. The model shown in the ACI fundamentals guide isn’t entirely correct either, because it doesn’t show the possibility of configuring a subnet under an EPG. I’ve made a small correction to that model; the image below does show that possibility.

Tenants

Both diagrams show the tenant as the top level. In ACI you always have at least three tenants, because these are created for you, but without a fourth tenant the fabric is mostly useless. The three default tenants are:

- common: The common tenant is usually used as a shared services tenant. Objects created inside the common tenant are available to other tenants.

- infra: The infra tenant holds the configuration of the fabric infrastructure itself and is used to extend that infrastructure. Multi-Pod and Multi-Site fabrics use the infra tenant to connect the pods or sites to each other.

- mgmt: Most of the management configuration is performed in the mgmt tenant. Here you assign management IP addresses to the switches and configure the contracts that limit access to the fabric management interfaces.

You will probably want to make an additional tenant for your environment. A tenant in ACI is an administrative boundary. It’s not a technical boundary. This means that there is no actual network construct which matches the tenant. Tenants are primarily used to create domains for access control (using RBAC), but you can also create tenants to organize your fabric in a logical manner (for you).
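Because the whole fabric is modelled as objects, a tenant is itself just an object. As a minimal sketch, assuming the JSON shape the APIC REST API uses (`{class: {"attributes": ..., "children": ...}}`), the snippet below builds a tenant object without sending it anywhere; the tenant name and the `mo` helper are hypothetical. Note that the object carries no addressing or forwarding settings, which matches the idea of a tenant as an administrative boundary.

```python
import json


def mo(cls, attrs, children=()):
    """Build one managed object in the (assumed) APIC REST JSON shape."""
    body = {"attributes": dict(attrs)}
    if children:
        body["children"] = list(children)
    return {cls: body}


# Hypothetical tenant: only a name and a description, no network settings.
tenant = mo("fvTenant", {"name": "Production", "descr": "Production workloads"})

print(json.dumps(tenant, indent=2))
```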

If you’re using the Kubernetes integration, ACI will create a separate tenant for each Kubernetes environment.

In my experience it helps to minimize the number of tenants in your fabric, especially if they fall under the same administrative domain. There is no reason to create many tenants if you are the one managing them all. However, some companies like to separate Test, QA and Production environments, and tenants can be used for that purpose. When these tenants need to talk to each other, you can export contracts between them, but this adds quite a lot of complexity.

Contexts

Within a tenant you need at least one Context (also called a VRF). The context maps directly to the VRF (lite) concept in classical networks, so a Context is a separate layer 3 domain. This also means that IP addresses must be unique within a VRF, but may overlap between two VRFs.
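A small planning check makes the uniqueness rule concrete. This is a hypothetical helper, not part of ACI: it flags overlapping prefixes within the same VRF, while the same prefix in two different VRFs is accepted because each VRF is its own layer 3 domain. The VRF names and prefixes are made up for the example.

```python
from ipaddress import ip_network


def find_overlaps(subnets_by_vrf):
    """Return (vrf, a, b) for every pair of overlapping subnets inside one VRF.

    Overlaps between different VRFs are ignored on purpose.
    """
    conflicts = []
    for vrf, prefixes in subnets_by_vrf.items():
        # strict=False also accepts gateway-style input such as 10.0.0.1/24
        nets = [ip_network(p, strict=False) for p in prefixes]
        for i, a in enumerate(nets):
            for b in nets[i + 1:]:
                if a.overlaps(b):
                    conflicts.append((vrf, str(a), str(b)))
    return conflicts


# The same 10.0.0.0/24 in two VRFs is fine, but 10.1.0.0/16 and
# 10.1.5.0/24 inside one VRF is a conflict.
plan = {
    "VRF-A": ["10.0.0.0/24", "10.1.0.0/16", "10.1.5.0/24"],
    "VRF-B": ["10.0.0.0/24"],
}
print(find_overlaps(plan))
```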

In classic networks VRFs are often used to separate security domains on a single device. The VRF prevents hosts in different VRFs from reaching each other unless you use a technique such as route leaking, or a firewall that connects the two VRFs. In ACI you don’t need VRFs for this purpose. By default ACI is a closed network (default deny): unless specifically allowed, devices in different groups (let’s think of those groups as VLANs for now) can’t talk to each other. Putting two groups into different VRFs to prevent them from talking to each other therefore isn’t required anymore.

Usually there are two reasons to implement different VRFs in an ACI environment:

- You need overlapping IP spaces.

- You want to mirror the existing network into ACI using a firewall to stitch the VRFs together.

Bridge Domains

The Bridge Domain is the first concept that doesn’t really exist in classical switching but does influence how traffic is handled on the network. (Tenants don’t really exist in classical networks either, but a tenant is just an administrative boundary.)

The bridge domain is the layer two domain in ACI. Everything in a BD is layer two adjacent. A bridge domain is a member of a VRF, even if there is no IP configuration on the bridge domain (called a subnet, which is discussed in the next section).

The configuration of a BD has a big impact on how the network behaves and how endpoints are learned. Endpoint learning in ACI is a big enough topic to warrant its own chapter, so I won’t cover it in detail here. For now, what’s important is that you can configure a BD to behave like a regular VLAN in a classic switching infrastructure. Alternatively, you can configure a BD to behave in an ACI-specific way. The BD then applies several traffic-handling optimizations that reduce the impact of BUM (broadcast, unknown unicast and multicast) traffic on the network.
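Two of the BD settings behind this difference are how unknown unicast traffic is handled and whether ARP is flooded. The sketch below assumes the APIC attribute names `unkMacUcastAct` and `arpFlood` on the `fvBD` class, and the `fvRsCtx` relation that ties a BD to its VRF, in the REST JSON format. The two presets, the helper functions and the object names are illustrative only, not a recommended design. Note that both flavours carry the VRF relation even though neither has a subnet.

```python
def mo(cls, attrs, children=()):
    """Build one managed object in the (assumed) APIC REST JSON shape."""
    body = {"attributes": dict(attrs)}
    if children:
        body["children"] = list(children)
    return {cls: body}


def bridge_domain(name, vrf, vlan_like):
    """Return an fvBD object in one of two illustrative flavours."""
    if vlan_like:
        # Classic VLAN behaviour: flood unknown unicast and ARP in the BD.
        behaviour = {"unkMacUcastAct": "flood", "arpFlood": "yes"}
    else:
        # ACI-style behaviour: send unknown unicast to the spine proxy
        # and don't flood ARP.
        behaviour = {"unkMacUcastAct": "proxy", "arpFlood": "no"}
    # Every BD is tied to a VRF through fvRsCtx, even without a subnet.
    return mo("fvBD", {"name": name, **behaviour},
              [mo("fvRsCtx", {"tnFvCtxName": vrf})])


legacy_bd = bridge_domain("Legacy-BD", "Prod-VRF", vlan_like=True)
optimized_bd = bridge_domain("Web-BD", "Prod-VRF", vlan_like=False)
```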

Subnets

A subnet is what it says it is, and it can be configured under the BD. A bridge domain can have zero or more subnets, but it needs at least one subnet if it is to perform routing for the hosts residing in the BD. There are several types of subnets:

- Private: These subnets are local to the VRF and will not be advertised outside of the fabric.

- Public: These subnets are available for advertisement outside of the fabric.

- Shared: These subnets will be shared between VRFs inside of the fabric.

You can combine the Shared option with either Private or Public. However, Private and Public can’t be combined (which should be obvious).
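The combination rule can be written as a small check. This assumes the APIC convention of a comma-separated `scope` value on the `fvSubnet` object (for example `"public,shared"`); the validator itself is a hypothetical helper, and the subnet is a made-up example.

```python
VALID_FLAGS = {"private", "public", "shared"}


def validate_scope(scope):
    """Validate a comma-separated subnet scope string such as "public,shared".

    Shared can be combined with Private or Public; Private and Public
    exclude each other.
    """
    flags = {flag.strip() for flag in scope.split(",") if flag.strip()}
    unknown = flags - VALID_FLAGS
    if unknown:
        raise ValueError(f"unknown scope flag(s): {sorted(unknown)}")
    if {"private", "public"} <= flags:
        raise ValueError("a subnet can't be both private and public")
    return flags


# A hypothetical BD subnet (gateway address) that is advertised outside
# the fabric and shared between VRFs.
subnet = {"fvSubnet": {"attributes": {"ip": "10.0.0.1/24", "scope": "public,shared"}}}
print(sorted(validate_scope(subnet["fvSubnet"]["attributes"]["scope"])))
```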

Subnets are most often seen as part of a BD, which is a logical way to look at them. However, it is also possible to configure subnets directly at the EPG level. This is actually required if you want to advertise the subnet between VRFs.

Keep in mind that when you configure a subnet at the BD level, all EPGs that are members of that BD can use it. If you configure the subnet at the EPG level, only that specific EPG can use the subnet, regardless of the number of EPGs in the same BD.

EPG

The EPG is the most important construct in ACI. Virtually everything is an EPG, but what is an EPG?

EPG stands for Endpoint Group: a group of endpoints. The definition of an endpoint is fairly broad; it can be anything that communicates on the network.

An EPG is always a member of a Bridge Domain. An EPG can’t be a member of multiple Bridge Domains, but multiple EPGs can be members of the same BD. Communication between EPGs is blocked by default. To allow communication, you can configure the EPGs as members of the VRF’s preferred group, which allows free communication between all EPGs in that preferred group. You can also configure the VRF to be ‘unenforced’, which allows all traffic between the EPGs in that VRF. These are valid options, but if you want to keep control over which traffic is exchanged between EPGs, you will use contracts.
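The options above can be summarised in a small, hypothetical model. It is not how the fabric implements policy; it only captures the decision order described here, ignores filters, and leaves out traffic between VRFs. The class, function and EPG names are made up for this example. It also encodes one default not mentioned above: endpoints within the same EPG can talk to each other unless intra-EPG isolation is configured.

```python
from dataclasses import dataclass, field


@dataclass
class Epg:
    name: str
    vrf: str
    preferred: bool = False                      # member of the VRF's preferred group
    provides: set = field(default_factory=set)   # contract names provided
    consumes: set = field(default_factory=set)   # contract names consumed


def may_communicate(a, b, unenforced_vrfs=frozenset()):
    """Simplified model: may EPGs a and b exchange traffic at all?

    Filters are ignored, and only EPGs in the same VRF are covered.
    """
    if a.vrf != b.vrf:
        raise ValueError("this sketch only covers EPGs in the same VRF")
    if a.name == b.name:
        return True          # endpoints in the same EPG can talk by default
    if a.vrf in unenforced_vrfs:
        return True          # unenforced VRF: no policy between its EPGs
    if a.preferred and b.preferred:
        return True          # both EPGs are in the VRF's preferred group
    # Otherwise one EPG must provide a contract that the other consumes.
    return bool(a.provides & b.consumes or b.provides & a.consumes)


web = Epg("WEB", "Prod-VRF", provides={"web"})
client = Epg("CLIENT", "Prod-VRF", consumes={"web"})
db = Epg("DB", "Prod-VRF")

print(may_communicate(client, web))                                # True (contract)
print(may_communicate(client, db))                                 # False (default deny)
print(may_communicate(client, db, unenforced_vrfs={"Prod-VRF"}))   # True (unenforced)
```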

As of ACI 5.0 there’s a new construct called ESG. I describe this construct in another post, which can be found here: Endpoint Security Groups Explored

Contracts

A contract is the object that enables communication between two EPGs. In that regard it is like an ACL: by default EPGs can’t communicate with each other, and a contract enables certain types of traffic between them.

It is also unlike an ACL, in that it applies to an EPG as a whole. You can’t specify individual IP addresses as source and destination.

A contract needs to be provided by an EPG and consumed by another EPG to allow traffic. Sometimes these terms might be confusing, so I’ll try my best to explain them.

When an EPG provides a contract, the services specified in the contract (using subjects and filters) are provided by that EPG. Say, for example, you have a web server in an EPG called “WEB”. This server provides two services, HTTP and HTTPS, so we define a contract that specifies these services. The “WEB” EPG then provides this contract.

Another endpoint, located in another EPG, needs to load a web page from the web server. Before it can do that, its EPG needs to consume the contract provided by the “WEB” EPG. As soon as it does so, the endpoint can communicate with the web server on the ports specified in the contract.

For a contract to work, it has to contain a subject with filters. These define the type of traffic that is allowed (or denied, when using Taboo contracts). Furthermore, the contract needs to be provided by one EPG and consumed by another, and the EPG that provides the contract is the one that offers the service. With a web server and a client in their own EPGs, the server EPG must provide the Web contract and the client EPG must consume it.
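The web example could look roughly like this in the object model. This is a sketch assuming the APIC REST JSON format and the classes `vzFilter`/`vzEntry` (filter and entries), `vzBrCP`/`vzSubj`/`vzRsSubjFiltAtt` (contract, subject and filter relation), `fvAp`/`fvAEPg` (application profile and EPGs) and `fvRsProv`/`fvRsCons` (provide and consume relations). All object names are hypothetical, the referenced bridge domains are assumed to exist, and nothing is posted to a controller.

```python
import json


def mo(cls, attrs, children=()):
    """Build one managed object in the (assumed) APIC REST JSON shape."""
    body = {"attributes": dict(attrs)}
    if children:
        body["children"] = list(children)
    return {cls: body}


# Filter: the traffic the contract is about (HTTP and HTTPS).
web_filter = mo("vzFilter", {"name": "http-https"}, [
    mo("vzEntry", {"name": "http", "etherT": "ip", "prot": "tcp",
                   "dFromPort": "80", "dToPort": "80"}),
    mo("vzEntry", {"name": "https", "etherT": "ip", "prot": "tcp",
                   "dFromPort": "443", "dToPort": "443"}),
])

# Contract with one subject that applies the filter in both directions,
# reversing the ports for the return traffic (revFltPorts).
web_contract = mo("vzBrCP", {"name": "web"}, [
    mo("vzSubj", {"name": "web-traffic", "revFltPorts": "yes"}, [
        mo("vzRsSubjFiltAtt", {"tnVzFilterName": "http-https"}),
    ]),
])

# The server EPG provides the contract; the client EPG consumes it.
app_profile = mo("fvAp", {"name": "Webshop"}, [
    mo("fvAEPg", {"name": "WEB"}, [
        mo("fvRsBd", {"tnFvBDName": "Web-BD"}),
        mo("fvRsProv", {"tnVzBrCPName": "web"}),
    ]),
    mo("fvAEPg", {"name": "CLIENT"}, [
        mo("fvRsBd", {"tnFvBDName": "Client-BD"}),
        mo("fvRsCons", {"tnVzBrCPName": "web"}),
    ]),
])

tenant = mo("fvTenant", {"name": "Production"},
            [web_filter, web_contract, app_profile])
print(json.dumps(tenant, indent=2))
```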

Contracts are used for more than ‘just’ allowing traffic between EPGs. They are also used to integrate L4-L7 services into the fabric and to limit the scope of route leaking between Contexts. We’ll zoom in on contracts in a separate chapter.

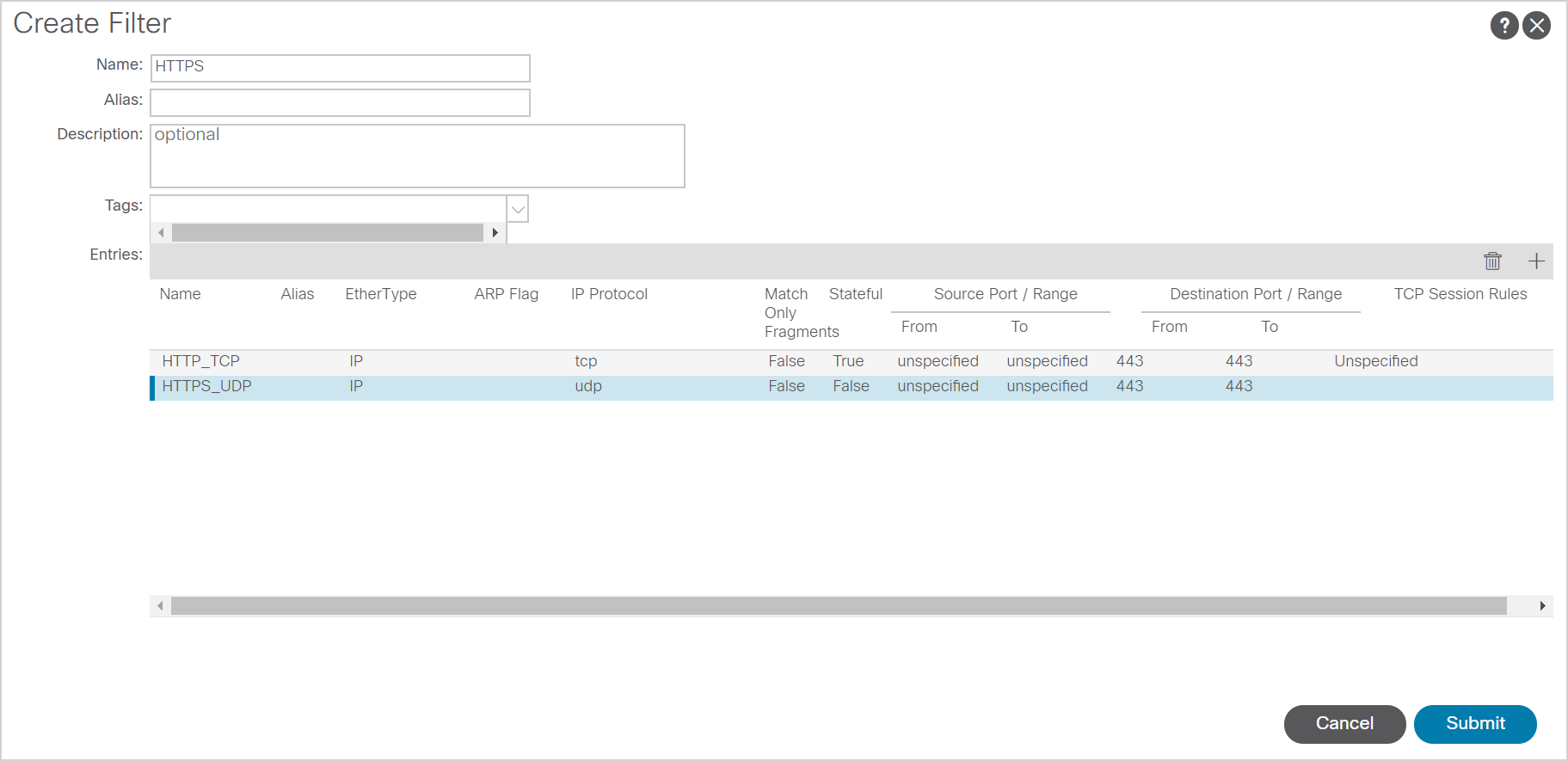

Filters

A filter is a set of rules that defines the traffic to match. A filter can contain one or more entries, but it is recommended to keep filters as simple as possible and focused on a single protocol. Take DNS as an example: it uses port 53 on both TCP and UDP, so a good DNS filter contains entries for both TCP and UDP port 53.

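Filter entries can be configured to be stateful. Despite the name, the fabric doesn’t track TCP sessions the way a firewall does: with the stateful option enabled, the fabric only permits TCP traffic from the provider to the consumer if the ACK flag is set. This prevents the provider from opening new TCP connections towards the consumer.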
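The DNS filter from the example above could be expressed as follows. This is a minimal sketch assuming the APIC `vzFilter`/`vzEntry` classes and their attribute names (`etherT`, `prot`, `dFromPort`, `dToPort`, `stateful`) in the REST JSON format; the filter and entry names are hypothetical. The stateful flag is only set on the TCP entry, because the ACK check applies to TCP.

```python
def mo(cls, attrs, children=()):
    """Build one managed object in the (assumed) APIC REST JSON shape."""
    body = {"attributes": dict(attrs)}
    if children:
        body["children"] = list(children)
    return {cls: body}


# One filter focused on one protocol (DNS), with an entry per transport.
dns_filter = mo("vzFilter", {"name": "dns"}, [
    mo("vzEntry", {
        "name": f"dns-{proto}",
        "etherT": "ip",
        "prot": proto,
        "dFromPort": "53",
        "dToPort": "53",
        # Stateful (ACK flag check) only makes sense for the TCP entry.
        "stateful": "yes" if proto == "tcp" else "no",
    })
    for proto in ("udp", "tcp")
])
```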

Subjects

Subjects are another layer of abstraction in ACI for building complex contracts. A subject applies filters to the contract and lets you choose whether they apply in both directions, set DSCP values and much more.

External Network

Remember when I said that all EPGs must be part of a Bridge Domain? That’s always true, except when it isn’t. External networks are in fact a kind of EPG, but they aren’t part of a BD. External networks are the EPGs you define on an L3out.

When you configure ACI to communicate with the outside world over a layer 3 connection, you create an L3out. Within this L3out you need to define External Networks.

Since ACI 4.2, the External Networks part of an L3out is called ‘External EPG’; before that, it was just called ‘Network’.
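In the object model the External EPG is the `l3extInstP` class under an `l3extOut`. The sketch below assumes that class, `l3extRsEctx` for the L3out’s VRF relation, and `l3extSubnet` with the `import-security` scope to classify external prefixes into the External EPG. Names are hypothetical, and a working L3out needs more configuration than shown (node and interface profiles, routing protocol, routed domain). It illustrates the point above: the External EPG consumes a contract like any other EPG, but it has no bridge domain relation.

```python
def mo(cls, attrs, children=()):
    """Build one managed object in the (assumed) APIC REST JSON shape."""
    body = {"attributes": dict(attrs)}
    if children:
        body["children"] = list(children)
    return {cls: body}


# Hypothetical L3out "Internet" in VRF "Prod-VRF"; node/interface profiles,
# routing protocol and domain are left out of this sketch.
l3out = mo("l3extOut", {"name": "Internet"}, [
    mo("l3extRsEctx", {"tnFvCtxName": "Prod-VRF"}),       # the L3out's VRF
    # External EPG: classifies all external prefixes (0.0.0.0/0) into it.
    # There is no fvRsBd relation: it isn't part of a bridge domain.
    mo("l3extInstP", {"name": "All-External"}, [
        mo("l3extSubnet", {"ip": "0.0.0.0/0", "scope": "import-security"}),
        mo("fvRsCons", {"tnVzBrCPName": "web"}),            # consumes like any EPG
    ]),
])
```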

Application Profile

The last object in the drawing is the application profile. An EPG is part of an Application Profile. If you’re working in a network-centric environment, the application profile is just a container for EPGs. However, when running in application-centric mode, application profiles become much more important: they are now the containers for the application, which makes a big difference in how you should view them.

The idea behind this is that you group all components of the application together in an application profile. This enables you (and the ACI fabric) to monitor the health of the various components that are part of the application. You can also define specific monitoring policies or QoS policies per application profile. This lets you apply a much stricter monitoring policy to your business-critical applications, while supporting applications are monitored less closely.
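In application-centric mode the profile mirrors the structure of the application. As a sketch, assuming the APIC `fvAp`/`fvAEPg` classes and the `fvRsBd` relation in the REST JSON format, the snippet below groups the tiers of a hypothetical three-tier “Webshop” application into one profile. The helper and all names are made up, and monitoring or QoS policy relations are not shown.

```python
def mo(cls, attrs, children=()):
    """Build one managed object in the (assumed) APIC REST JSON shape."""
    body = {"attributes": dict(attrs)}
    if children:
        body["children"] = list(children)
    return {cls: body}


def application_profile(name, tiers):
    """Build an fvAp containing one EPG per application tier.

    `tiers` maps a hypothetical EPG name to the BD that EPG belongs to.
    """
    epgs = [
        mo("fvAEPg", {"name": epg}, [mo("fvRsBd", {"tnFvBDName": bd})])
        for epg, bd in tiers.items()
    ]
    return mo("fvAp", {"name": name}, epgs)


# Group all tiers of one (hypothetical) application in a single profile.
webshop = application_profile("Webshop", {
    "WEB": "Web-BD",
    "APP": "App-BD",
    "DB": "DB-BD",
})
```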