ACI Release 5.2 New Features

ACI 5.2 was released in the summer of 2021, and recently (October 18th) version 5.2(3) was released. Cisco has long earmarked that version to become the recommended, long-term support release. At the time of writing it hasn't received the "Recommended" designation yet, but that will likely happen within the next few days to weeks.

One important thing to note is that I don't expect any major new features to be added to ACI 5.2 anymore. As soon as the version is recommended it becomes a maintenance release, so no new features should be expected. Version 5.2(3), however, did get some major new features (even compared to 5.2(1)), which I would like to discuss in this post.

Tweet by Michael van Kleij (@mvankleij_nl), October 19, 2021:

#Cisco #ACI version 5.2(3) has been released. 5.2(3) or 5.2(4) will likely become the next recommended release. I will soon release a "whats new post", but for now these two new features caught my eye:

- Back to back multi-pod

- BGP IPN support

#CiscoChampion pic.twitter.com/ookqD7xEmf

On October 19th I tweeted about two notable features. This post covers those features, but ACI 5.2 is a big release:

- Support for the new Nexus N9K-9332D-GX2B switch as both spine and leaf switch

- SMU support

- ESG membership on additional parameters

- A new contract view in the APIC GUI

- Remote APIC clusters

- Peer to peer link support for remote physical leafs

- Back to back multi-pod

- BGP support for the IPN

- Various additional security features

Please read my other two posts about new features in ACI 5.0 and 5.1:

Hardware support

ACI version 5.2 supports the new Nexus N9K-9332D-GX2B switch. It is a 32-port 400G switch that can be deployed as either a leaf or a spine. That is massive, and it is primarily aimed at large service providers and similar organizations. I don't expect to encounter this switch at my customers anytime soon. However, if you need 400G, this might be the switch for you.

NOTE: ACI 5.2 will likely be the first version in the 5.x train you might consider upgrading to. Don't forget that ACI 5.0 dropped support for Gen 1 hardware. If you still have Gen 1 hardware in your fabric, DON'T upgrade until you have replaced those devices.

Operational improvements

ACI 5.2 includes several operational improvements.

Upgrade process

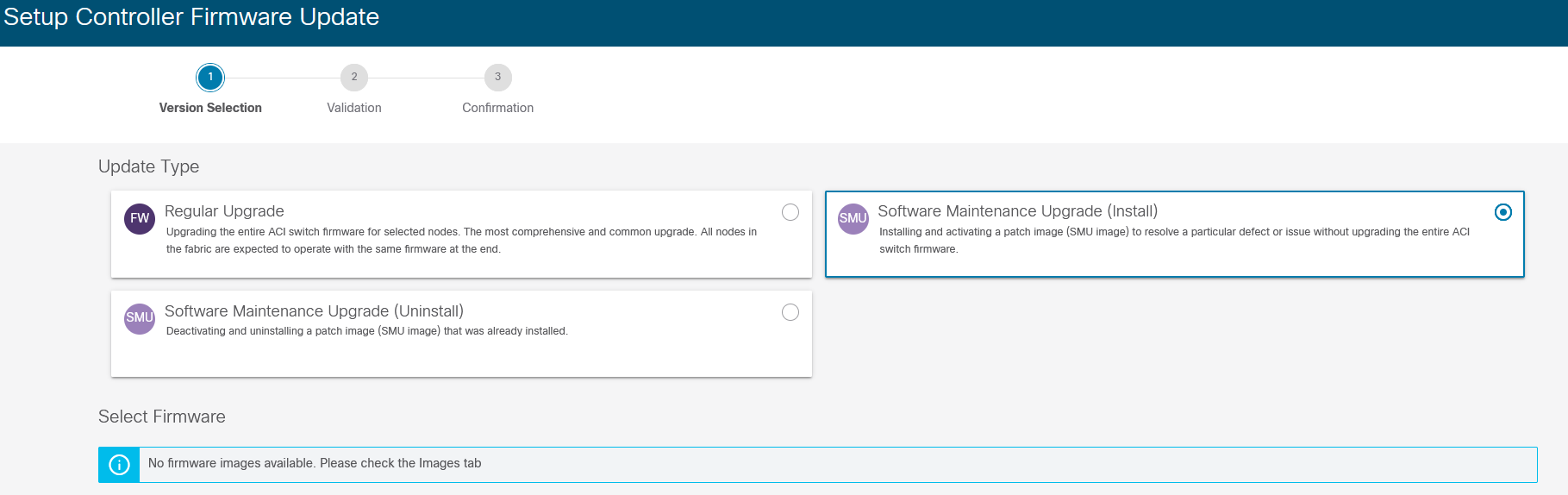

In my post about ACI 5.1 I already wrote about the changes to the upgrade process. ACI 5.2 modifies the upgrade options again: you can now apply SMUs (Software Maintenance Upgrades). Many of you will already be familiar with SMUs, as they let you apply patches to your running code. ACI did not support SMUs before, but that changed with ACI 5.2.

An SMU lets you apply a patch without upgrading the complete ACI environment. In most cases this also means you don't have to restart the switch; only the affected process is restarted. You can download SMUs from the same location as the full releases. As of this writing no SMUs are available yet, but they will likely appear soon. Once they do, you can schedule their installation the same way you already schedule a regular upgrade.

ACI SMU upgrade window


Another feature added in ACI 5.2 is the auto-EPLD upgrade. If you have ever converted an NX-OS switch to ACI mode, you will have seen an error in your ACI fabric telling you that the switch's BIOS is incorrect. This happens because converting a switch from NX-OS to ACI mode does not upgrade the BIOS/EPLD. The error disappears as soon as you upgrade the switch from within the fabric, but it is annoying nonetheless. As of ACI 5.2 the fabric recognizes this issue and forces a switch that comes online with a mismatching EPLD version to update its EPLD, automatically removing the problem.

ESG membership on additional parameters

Endpoint Security Groups (ESGs) have been around since ACI 5.0. In that release you could only add endpoints to an ESG based on their IP address. Though interesting, this severely limited the usability of ESGs. What you want is dynamic ESG membership (or at least, that's what I want). Since ACI 5.2 it is possible to assign endpoints to specific ESGs based on tags: you tag an endpoint and it is automatically placed inside the matching ESG. This means we can now dynamically isolate endpoints using tags, without configuring the microsegmentation option within ACI.

We can also tag devices based on their role, so web servers go into the web server ESG and database servers into the database ESG. One thing to note: tags are set on VMs, not on physical servers.

NOTE: Keep in mind that if you are using Multi-Site, you can't configure ESGs from the MSO (or NDO, Nexus Dashboard Orchestrator, as it will henceforth be known).
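To make tag-based membership concrete, here is a minimal Python sketch that models the idea outside of ACI. It is not the APIC object model or API: `Endpoint`, `TagSelector`, `classify` and the `role` tag are hypothetical names, and the "first matching ESG wins" rule is a simplification chosen for the example, not ACI's actual precedence logic for overlapping selectors.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Endpoint:
    name: str
    ip: str
    tags: Dict[str, str] = field(default_factory=dict)  # hypothetical VM tags


@dataclass(frozen=True)
class TagSelector:
    """Hypothetical ESG selector: matches one tag key/value pair."""
    key: str
    value: str

    def matches(self, ep: Endpoint) -> bool:
        return ep.tags.get(self.key) == self.value


def classify(endpoints: List[Endpoint], esgs: Dict[str, List[TagSelector]]) -> Dict[str, str]:
    """Map each endpoint to the first ESG with a matching selector (simplified)."""
    membership: Dict[str, str] = {}
    for ep in endpoints:
        for esg_name, selectors in esgs.items():
            if any(sel.matches(ep) for sel in selectors):
                membership[ep.name] = esg_name
                break
    return membership


esgs = {
    "web-esg": [TagSelector("role", "web")],
    "db-esg": [TagSelector("role", "db")],
}
endpoints = [
    Endpoint("vm1", "10.0.0.11", {"role": "web"}),
    Endpoint("vm2", "10.0.0.21", {"role": "db"}),
    Endpoint("vm3", "10.0.0.31"),  # untagged: not placed in any ESG
]
print(classify(endpoints, esgs))  # {'vm1': 'web-esg', 'vm2': 'db-esg'}

# Re-tagging vm3 is enough to move it into an ESG; the selectors stay untouched.
endpoints[2].tags["role"] = "web"
print(classify(endpoints, esgs))  # {'vm1': 'web-esg', 'vm2': 'db-esg', 'vm3': 'web-esg'}
```

The point of the model is the last two lines: membership follows the tag, so changing a VM's tag is all it takes to move it between security groups.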

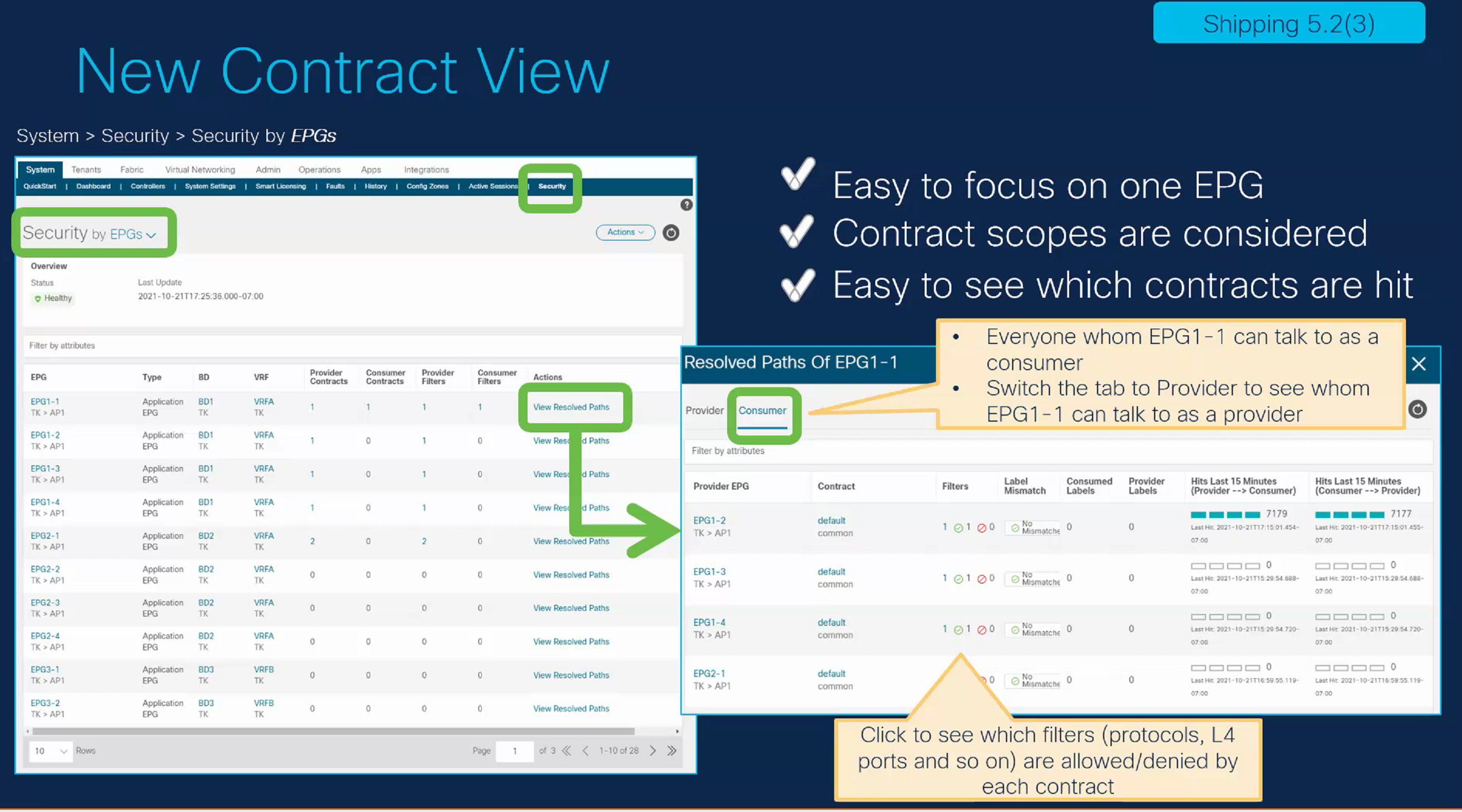

New contract viewer

When many contracts are provided and consumed, ACI isn't the easiest platform to operate. Contracts easily get messy, and additional tools are required to understand which contracts are used where and what traffic is allowed. This is one of the reasons the ACI contract viewer app is so popular. Cisco has improved the APIC GUI to natively show more information about contracts, as can be seen in the image below.

ACI Contract view (copyright Cisco)


BGP Site of Origin support

It is now possible to configure a BGP Site of Origin (SoO) extended community. The SoO community identifies the site from which a BGP route was learned, which helps prevent routing loops in BGP networks: a route is not advertised back into the site it came from. The SoO community is configured as part of the L3out configuration. For some of my customers this is a very welcome addition.
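The loop-prevention logic behind SoO can be sketched in a few lines of Python. This models generic BGP SoO behavior, not ACI's implementation; `Route`, `tag_on_receive`, `should_advertise` and the SoO values `65001:1` and `65001:2` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    prefix: str
    soo: Optional[str] = None  # Site of Origin extended community, e.g. "65001:1"


def tag_on_receive(route: Route, site_soo: str) -> Route:
    """A route learned from a site is tagged with that site's SoO value."""
    return Route(route.prefix, site_soo)


def should_advertise(route: Route, neighbor_soo: str) -> bool:
    """Suppress the route towards a neighbor that belongs to the same site."""
    return route.soo != neighbor_soo


# A prefix learned from site A (SoO 65001:1) ...
learned = tag_on_receive(Route("192.0.2.0/24"), "65001:1")
# ... is not sent back into site A, but is still sent to site B (SoO 65001:2).
print(should_advertise(learned, "65001:1"))  # False
print(should_advertise(learned, "65001:2"))  # True
```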

New design considerations

ACI 5.2 brings us several features that might impact the design of ACI fabrics going forward.

Back to back Spines for multipod

This is the feature I'm most excited about. 90% of my customers run multi-pod, and 80% of those run it across just two locations. All of those customers needed an IPN just to connect four spines together. Of course, some of them used an existing network with a dedicated VRF to connect the spines, but many of my customers bought dedicated IPN switches.

As of ACI 5.2(3) we can connect the spines of the two pods directly to each other, somewhat similar to the two-site Multi-Site design. For new deployments this means we can get rid of the IPN switches, which will save a significant amount of money. This design is limited to two pods: if you need three pods, remote physical leafs or anything else that requires an IPN, you can't use back-to-back multi-pod. You also can't combine an IPN with a back-to-back multi-pod design.

Cisco has already published a Knowledge Base article on this design.

As for the design, it is recommended to create a full mesh of connections between the two pods, as can be seen in the image below. However, this is not a hard requirement: you can use a square design when you're constrained by the amount of fiber available between the locations. My advice would be to build a full mesh between the two pods if possible.

Please note that by full mesh I mean a full mesh between the two pods, not within a pod. Spines in Pod 1 do not connect to each other, and the same goes for Pod 2.
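The difference between the two cabling options can be illustrated by enumerating the inter-pod links. This is a minimal Python sketch assuming two spines per pod and a single link per spine pair; the spine names and helper functions are hypothetical, and the square layout (spine N in Pod 1 to spine N in Pod 2) is a simplified reading of that design.

```python
from itertools import product
from typing import List, Tuple

Link = Tuple[str, str]


def full_mesh_links(pod1: List[str], pod2: List[str]) -> List[Link]:
    """Every spine in Pod 1 connects to every spine in Pod 2; no links inside a pod."""
    return list(product(pod1, pod2))


def square_links(pod1: List[str], pod2: List[str]) -> List[Link]:
    """Fiber-constrained square: spine N in Pod 1 connects only to spine N in Pod 2."""
    return list(zip(pod1, pod2))


pod1 = ["pod1-spine1", "pod1-spine2"]
pod2 = ["pod2-spine1", "pod2-spine2"]
print(len(full_mesh_links(pod1, pod2)))  # 4 inter-pod links
print(len(square_links(pod1, pod2)))     # 2 inter-pod links
```

With two spines per pod, the full mesh doubles the number of links (and fiber pairs) between the locations compared to the square, which is exactly the trade-off described above.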

ACI back to back multi-pod (copyright Cisco)


Going forward I expect to deploy this a lot.

You might wonder whether it is possible to migrate from a back-to-back design to a full IPN design, for example when you add a third pod. The answer is yes, you can migrate to a full IPN design. This does require a change window, as the migration won't be hitless. You can also remove an existing IPN in favor of the back-to-back design. To be fair, I would not recommend doing that if you already have an IPN. However, if your IPN switches are end of support, you could consider moving to a back-to-back design instead of buying new IPN switches to save money.

BGP support for the IPN

If you still need an IPN for your environment but don't want to use OSPF, you can now use BGP for your IPN connections. You do need to use eBGP; iBGP is not supported in this design.

Apart from the routing protocol the IPN design stays the same, so I don't have much to add here.

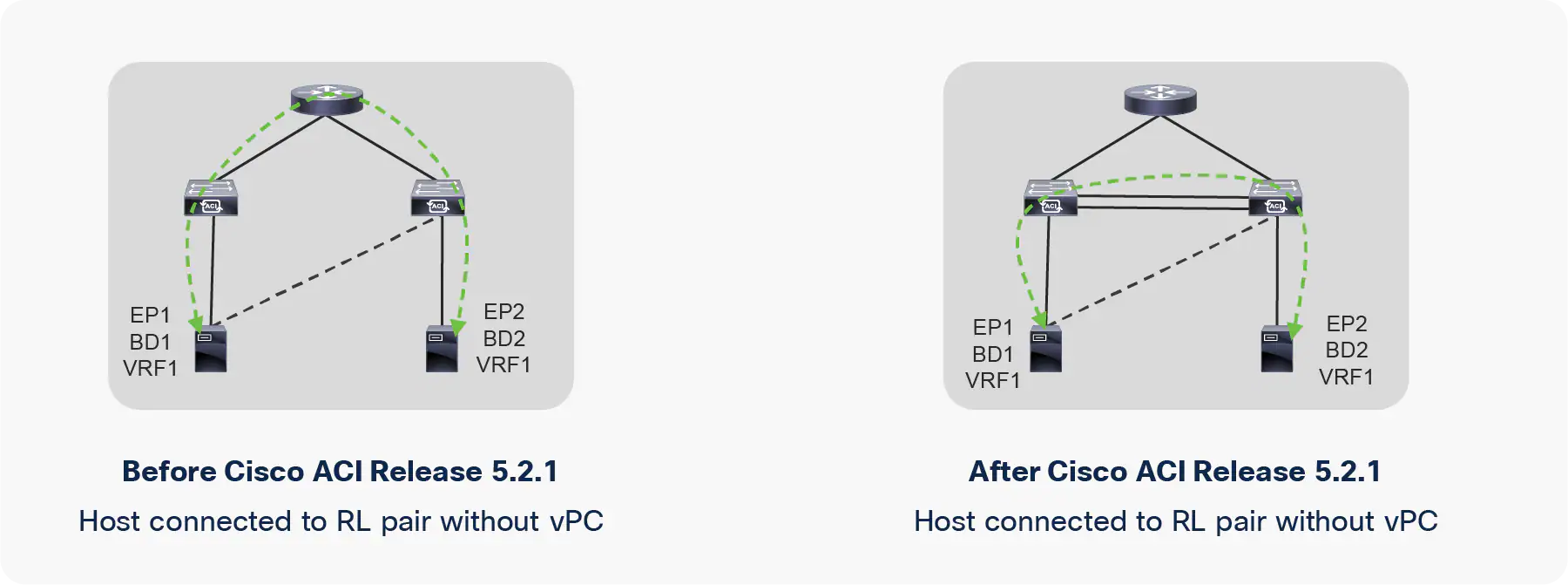

Peer link between remote physical leafs

It appears that the theme for ACI 5.2 is connecting similar devices to each other. We've learned that we're not allowed to connect spines directly to each other, yet that is exactly what back-to-back multi-pod does. We've also learned that we're not allowed to connect leaf switches to each other, and that, too, is now possible for remote physical leafs as of version 5.2.

By connecting remote physical leafs to each other, traffic between orphan ports on a single remote leaf pair no longer depends on the IPN switch. That improves availability.

The image below shows the traffic flow between two endpoints connected to remote leafs before and after ACI 5.2.
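A toy model shows why the peer link helps. The Python sketch below runs a breadth-first search over a simplified topology; the node names (`ep1`, `rl1`, `ipn` and so on) and the one-link-per-pair layout are hypothetical, and the model ignores VXLAN, vPC and everything else the real forwarding path involves.

```python
from collections import deque
from typing import Dict, Iterable, List, Optional, Set, Tuple

Links = List[Tuple[str, str]]


def shortest_path(links: Iterable[Tuple[str, str]], src: str, dst: str) -> Optional[List[str]]:
    """Breadth-first search over an undirected toy topology; None if unreachable."""
    graph: Dict[str, Set[str]] = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    prev: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path: List[str] = []
            cur: Optional[str] = node
            while cur is not None:
                path.append(cur)
                cur = prev[cur]
            return path[::-1]
        for nxt in sorted(graph.get(node, ())):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


def without_ipn(links: Links) -> Links:
    """Simulate losing the IPN by dropping every link that touches it."""
    return [link for link in links if "ipn" not in link]


# Two endpoints on orphan ports of a remote leaf pair, both leafs uplinked to the IPN
base: Links = [("ep1", "rl1"), ("ep2", "rl2"), ("rl1", "ipn"), ("rl2", "ipn")]
peer: Links = base + [("rl1", "rl2")]  # ACI 5.2: direct link between the remote leafs

print(shortest_path(base, "ep1", "ep2"))               # ['ep1', 'rl1', 'ipn', 'rl2', 'ep2']
print(shortest_path(peer, "ep1", "ep2"))               # ['ep1', 'rl1', 'rl2', 'ep2']
print(shortest_path(without_ipn(base), "ep1", "ep2"))  # None
print(shortest_path(without_ipn(peer), "ep1", "ep2"))  # ['ep1', 'rl1', 'rl2', 'ep2']
```

Without the peer link, losing the IPN cuts the two orphan-port endpoints off from each other; with it, they can still reach each other through the leaf pair.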

Remote leaf peer link (copyright Cisco)


Remote APIC cluster

This is a pretty weird one. I'm not entirely sure about the use case, but since Cisco is customer driven, there must have been customers asking for it. As of ACI 5.2 it is possible to manage an ACI fabric with an APIC cluster that is not physically connected to it, so you can place your APIC cluster somewhere else. As far as I've been able to determine, it is still not possible to use an APIC cluster to manage multiple ACI fabrics, so there is still a one-to-one relationship between an APIC cluster and an ACI fabric.

Furthermore, this feature is only available for greenfield fabrics. That means you can't simply move the APIC cluster of your existing ACI fabric somewhere else. In this design the APICs connect to the spine switches via an L3 network (IPN). Cisco has published a Knowledge Base article on this feature.

Additional features

As if the above list wasn't big enough yet, here are some more features:

Endpoint learning toggle per subnet

In prior versions of ACI we could disable endpoint learning for an entire VRF or for a single IP; now we can also disable it for a subnet. This gives us more flexibility in configuring endpoint learning and is especially useful for subnets used by load balancers or active/active failover clusters.
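Conceptually, the per-subnet toggle is a prefix-containment check: if an endpoint's IP falls inside a subnet with learning disabled, it isn't learned. The Python sketch below models only that decision with the standard `ipaddress` module; `DISABLED_SUBNETS`, `learning_enabled` and the example subnet are hypothetical, and this is not how the leaf switches implement it.

```python
import ipaddress
from typing import Iterable

# Hypothetical example: endpoint learning disabled for a load-balancer VIP range
DISABLED_SUBNETS = [ipaddress.ip_network("10.1.50.0/28")]


def learning_enabled(ip: str, disabled: Iterable = DISABLED_SUBNETS) -> bool:
    """Return False when the IP falls inside a subnet with endpoint learning disabled."""
    addr = ipaddress.ip_address(ip)
    return not any(addr in net for net in disabled)


print(learning_enabled("10.1.50.5"))   # False: inside 10.1.50.0/28
print(learning_enabled("10.1.50.20"))  # True: outside the /28 (.0 to .15)
```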

PBR improvements

There is a list of PBR improvements in ACI 5.2:

- Dynamic MAC address resolution for PBR targets

This lets us configure a PBR target without manually configuring its MAC address; regular ARP is used to resolve the MAC address of the device. This especially eases configuration for failover clusters.

- PBR on L3outs

We can now deploy PBR service graphs directly within an L3out.

- PBR URI probes

You can now use HTTP URIs to track the availability of a service node in a PBR policy.

Another thing to note, which is service graph related rather than strictly PBR, is the end of support for device packages. I don't think anybody really used them, but if you do, keep this in mind before upgrading to 5.2.
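A URI probe boils down to an HTTP request whose response status decides whether the service node is considered up. Here is a minimal Python sketch of that idea using only the standard library; `probe_uri`, the 2-second timeout and the "expect 200" rule are assumptions for illustration, not the parameters ACI uses for its PBR tracking.

```python
import urllib.error
import urllib.request


def probe_uri(url: str, timeout: float = 2.0, expected_status: int = 200) -> bool:
    """Return True if the service node answers an HTTP GET with the expected status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == expected_status
    except (urllib.error.URLError, OSError):
        # HTTPError (4xx/5xx), refused connections and timeouts all count as "down"
        return False
```

For example, `probe_uri("http://192.0.2.10/health")` would return `False` as soon as a (hypothetical) firewall health page stops answering with 200; a tracking mechanism could then take that node out of the redirect.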

Security features

Aside from the above features there are also some additional security features included in 5.2:

- FIPS level 2

- OAuth support

- RNG support

- Option to disable the USB port on switches

- MACsec support on GX2 switches

- Hold timer for Rogue EP

- Encryption for syslog (likely in 5.2(4))

- Secure Erase (5.2(4))

- MCP strict mode (5.2(4))