Cisco ACI and VMware NSX-T integration using ACI version 5.1

It has been possible to integrate VMware vCenter with ACI for years. However, more and more environments depend on NSX-T to manage their VMware infrastructure. They use NSX-T to deploy port groups and more.

When your environment runs both ACI and NSX-T, you might be interested in integrating the two. This integration has some benefits, most of which are the same as the ones you get from integrating ACI and vCenter.

Part of my job is designing and implementing ACI networks for customers. Most customers I work for have decided to go fully for ACI, which means they don’t also implement NSX-T (or NSX-V, for that matter). A very small minority have chosen to use ACI as an underlay for the NSX network. As a result I do not have a ton of experience with NSX-T, but luckily I do have access to an NSX-T lab, which I used to implement this integration.

I will not go into the discussion of whether NSX-T or ACI is better and why. There’s a lot to be said for both solutions.

What do I mean by integrating ACI with NSX-T?

When you search the internet for integrating ACI and NSX you will (at least at this point in time) find a lot of blogs and articles about using ACI as an underlay for NSX. This is not integration. Integration means that these systems interact with each other and are able to influence each other. Just running one on top of the other isn’t integrating.

ACI has supported actual integration since version 5.1. ACI uses the NSX-T northbound API to read information from NSX-T and, if you like, to configure NSX-T for you. This is similar to the vCenter integration, which has been present for a long time. Through this API integration it is now possible to see which VMs live in your virtual environment and to configure and modify the network constructs within NSX-T from ACI.

Benefits of integrating ACI and NSX-T

Integrating ACI with NSX-T gives you some advantages. Just having some insights into what lives within the virtual world can be very interesting for network administrators. This will help during troubleshooting of problems and getting a general insight into the health of the network.

Being able to configure constructs within NSX-T might give the network team back its focus on networking, but it also takes part of the work away from the virtualization team. In my experience some companies have networking and virtualization teams that work together closely, but others have teams with thick concrete walls between them. Depending on your organization, being able to configure NSX-T from within ACI might be seen as stealing work from another team, or as helping each other get the best out of the infrastructure. This isn’t any different from integrating with vCenter.

One of the clearest benefits I can see of integrating ACI with NSX-T comes from integrating the environment with SD-A as well. ACI and SD-A integrate flawlessly (especially when looking at phase 2 integration). This gives you external EPGs within ACI which contain all clients within the campus. When you integrate ACI with NSX-T, a virtual network within NSX-T is represented in ACI in the form of an EPG. That means that it is possible to create an end-to-end policy from the campus all the way into the virtual environment. This can of course also be achieved by different means, but integrating these three platforms makes it a lot easier.

Another advantage of integrating ACI and NSX-T is more flexibility in bringing different workloads into a single policy group. You might have NSX-T on VMware (and maybe some bare metal systems), ACI integrated with KVM (which actually works better than the current state of NSX-T integration with KVM), or other virtualization platforms and cloud environments. All these workloads, whether they live on bare metal, in the cloud or on hypervisors, can become part of a single EPG. This creates a single point of policy enforcement and management.

Configuring the Integration

Let’s dive in. To configure this we need to do stuff in both ACI and NSX-T, so don’t be scared when you see NSX-T screenshots :-).

Prerequisites and limitations

Some things before we start:

- ACI version 5.1(1) or higher

- NSX-T version 3.0 or higher

- NSX-T has been configured and deployed to ESX hosts connected to the ACI fabric

- If you haven’t already, configure a VLAN pool and AAEP for the new domain

Currently the integration only supports VLAN-based connectivity. Connections based on VXLAN are not supported. That means that within NSX-T you will get a VLAN-based transport zone.

Information gathering

You need to gather some information before you start:

- IP address or hostname of NSX-T

- Username and password for the ACI account on NSX-T

- Name for the ACI transport zone in NSX-T (this will be the VMM domain name on ACI)

- Names of the VLAN pool and AAEP you will use
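
If you like to keep this kind of checklist in a script, the items above could be captured in a small structure like the sketch below. The class name, field names and example values are all made up for illustration; nothing here is required by ACI or NSX-T.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class IntegrationInputs:
    """Values to collect before starting (field names are illustrative)."""
    nsx_manager: str      # IP address or hostname of NSX-T
    nsx_username: str     # account ACI will use on NSX-T
    nsx_password: str
    transport_zone: str   # this becomes the VMM domain name on ACI
    vlan_pool: str
    aaep: str

    def missing(self) -> List[str]:
        """Return the names of the fields that are still empty."""
        return [name for name, value in vars(self).items() if not value.strip()]


# Example values are placeholders, not taken from a real environment.
inputs = IntegrationInputs(
    nsx_manager="nsx01.lab.example",
    nsx_username="aci-integration",
    nsx_password="",
    transport_zone="ACI-NSX",
    vlan_pool="nsx-vlan-pool",
    aaep="nsx-aaep",
)
print(inputs.missing())
```

Running `missing()` before you open the ACI GUI is a cheap way to notice that you still need to ask the virtualization team for something.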

Configuring ACI

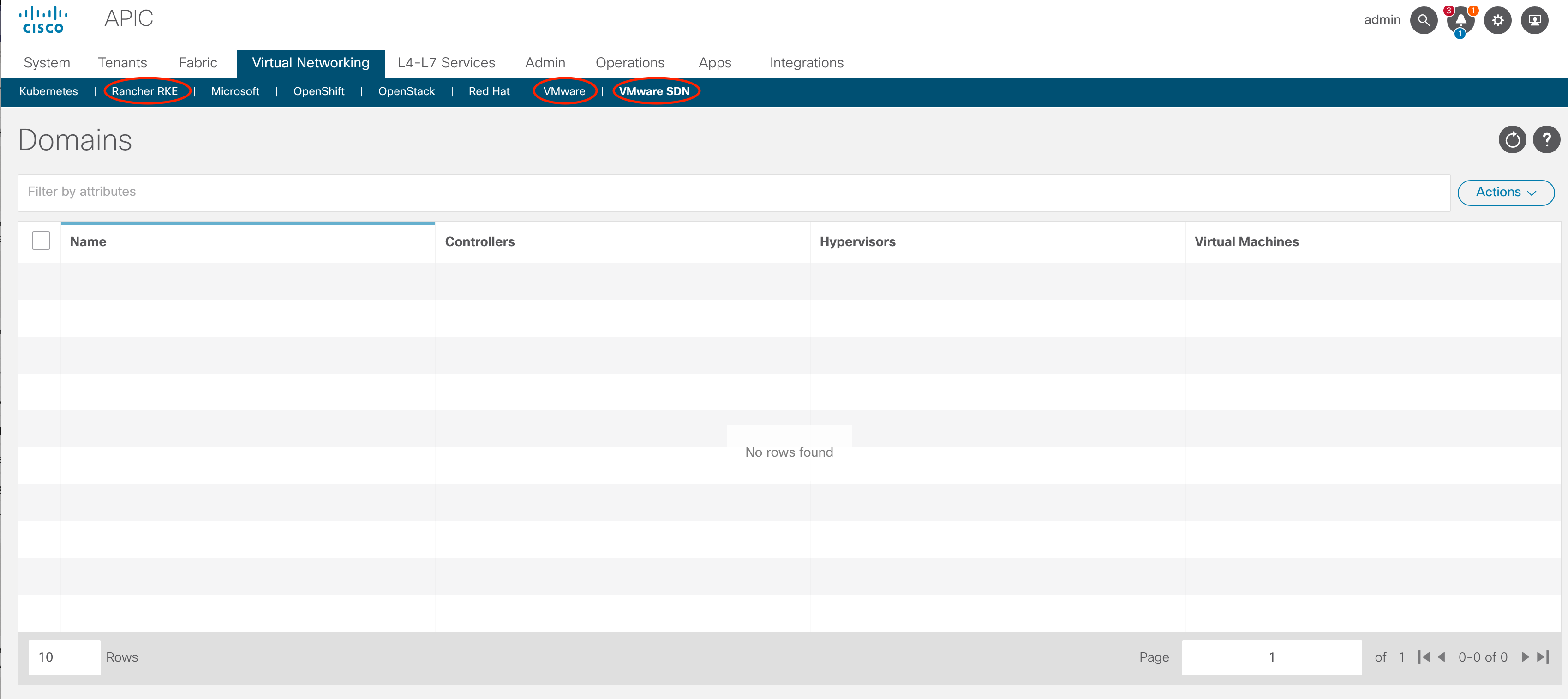

In ACI, go to Virtual Networking. If this is your first time in ACI 5.1 you will notice that the layout of this page has changed. The blue menu at the top of the page lists several different VMM domain types. The last one is VMware SDN. I think Cisco refuses to use the NSX name on this page, but that is what is meant by it. Click on it.

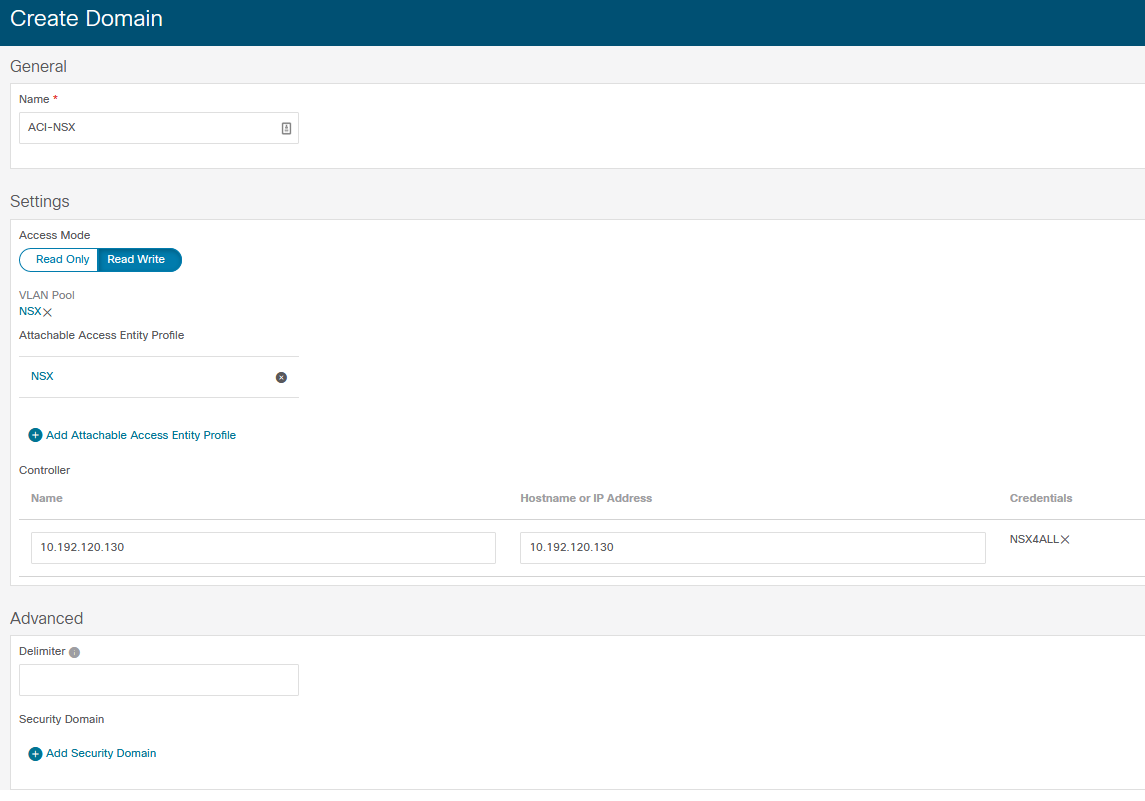

Here, under Actions, you can create a new domain. When you do that ACI will show you the “Create Domain” page. On this page you have some recognizable things to configure: the name, the VLAN pool and the AAEP.

You also need to add the NSX-T controller IP address and credentials. In the screenshot below you can see the domain as I’ve created it. I called it ACI-NSX and chose the read-write mode. This is the only mode in which ACI writes configuration to the NSX-T controller.

You can also configure some advanced features, like the delimiter and the security domain used. I chose to keep these default.

The base configuration for ACI has been done. The integration has been created on the ACI side, and if everything has been performed correctly ACI should already have pushed some configuration to NSX-T. Now we need to modify some configuration within NSX-T to be able to use the integration.
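
If you prefer to check the result outside the GUI, the APIC REST API can list the VMM domains it knows about. The sketch below only builds the requests and parses a response, it does not send anything. It uses the standard `aaaLogin` call and a class query on `vmmDomP`. I am assuming the VMware SDN domain shows up under the same `vmmDomP` class as other VMM domains; I have not confirmed that for this integration. The hostname, credentials and token are placeholders.

```python
import json
import urllib.request


def apic_login_request(apic: str, user: str, password: str) -> urllib.request.Request:
    """Build the APIC login request; the response contains a session token."""
    body = {"aaaUser": {"attributes": {"name": user, "pwd": password}}}
    return urllib.request.Request(
        f"https://{apic}/api/aaaLogin.json",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def vmm_domains_request(apic: str, token: str) -> urllib.request.Request:
    """Build a class query for all VMM domains (assumed to include VMware SDN)."""
    return urllib.request.Request(
        f"https://{apic}/api/node/class/vmmDomP.json",
        headers={"Cookie": f"APIC-cookie={token}"},
    )


def domain_names(response: dict) -> list:
    """Extract the domain names from a vmmDomP class query response."""
    return [item["vmmDomP"]["attributes"]["name"] for item in response.get("imdata", [])]


# Hand-written sample response, shaped like an APIC class query result.
sample = {"imdata": [{"vmmDomP": {"attributes": {"name": "ACI-NSX"}}}]}
print(domain_names(sample))
```

To actually send these requests you would pass them to `urllib.request.urlopen` (with whatever certificate handling your lab needs) and take the token from the `aaaLogin` response.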

Configuring NSX-T

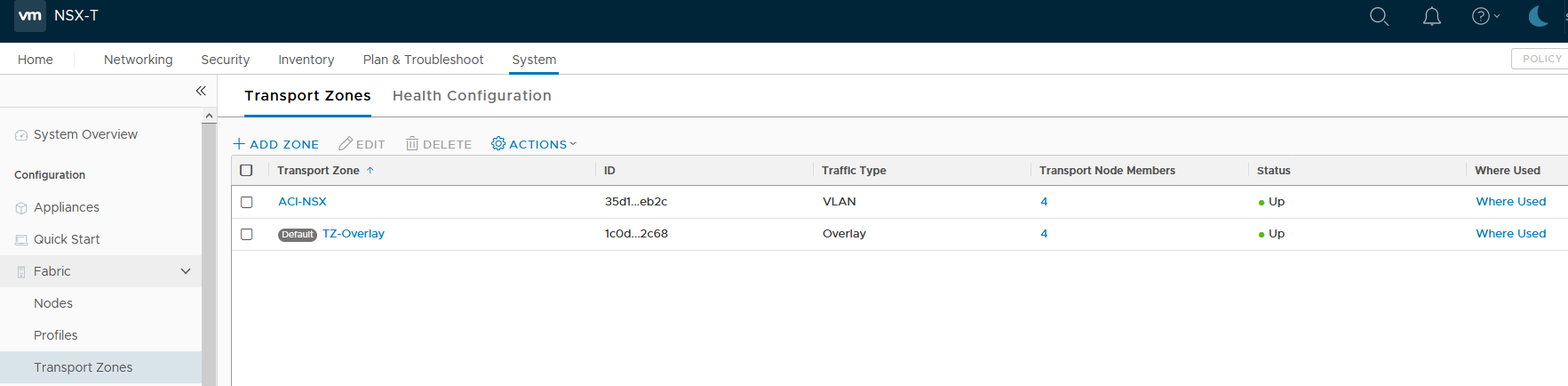

If what we’ve done is correct, we should be able to see the created transport zone in NSX-T. To check, navigate to System, Fabric, Transport Zones. There we should see all the transport zones available within our NSX-T fabric. In my example we only have the default TZ-Overlay and the recently created ACI-NSX zone. You can see that the traffic type is VLAN. In the screenshot below you can also see that I’ve already configured the 4 nodes of my ESX cluster to be part of this Transport Zone.
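
The same check can be scripted against the NSX-T Manager API, where `GET /api/v1/transport-zones` returns the transport zones in a `results` list. The sketch below only parses such a response. The sample data is hand-written to match my lab's two zones, and the helper names are my own.

```python
from typing import Optional


def find_transport_zone(response: dict, name: str) -> Optional[dict]:
    """Return the transport zone with the given display name, or None."""
    for zone in response.get("results", []):
        if zone.get("display_name") == name:
            return zone
    return None


def is_vlan_zone(zone: Optional[dict]) -> bool:
    """True when the zone exists and carries VLAN traffic."""
    return zone is not None and zone.get("transport_type") == "VLAN"


# Hand-written sample, shaped like a GET /api/v1/transport-zones response.
sample = {
    "results": [
        {"display_name": "TZ-Overlay", "transport_type": "OVERLAY"},
        {"display_name": "ACI-NSX", "transport_type": "VLAN"},
    ]
}

zone = find_transport_zone(sample, "ACI-NSX")
print(is_vlan_zone(zone))
```

If the zone is missing or not of type VLAN, ACI most likely did not push its configuration and it is worth rechecking the credentials on the ACI side.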

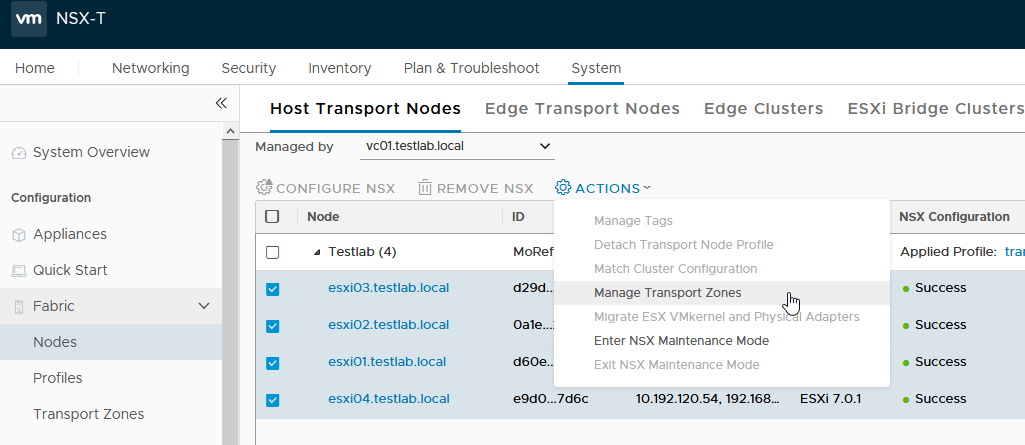

I had already added the nodes to this transport zone, but you will still have to do this. Go to System, Fabric, Nodes. By default you will see the “Host Transport Nodes” page, which is the right page to be on. Depending on your configuration you might see some nodes listed here, or you might not see anything. A seasoned NSX-T administrator will know that hosts managed by a vCenter are only shown when the right vCenter is selected. In my case all nodes are managed by vc01, so I have to select vc01 at the “Managed by” line. Once you see all required nodes you can select them and choose “Manage Transport Zones” from the Actions drop-down menu.

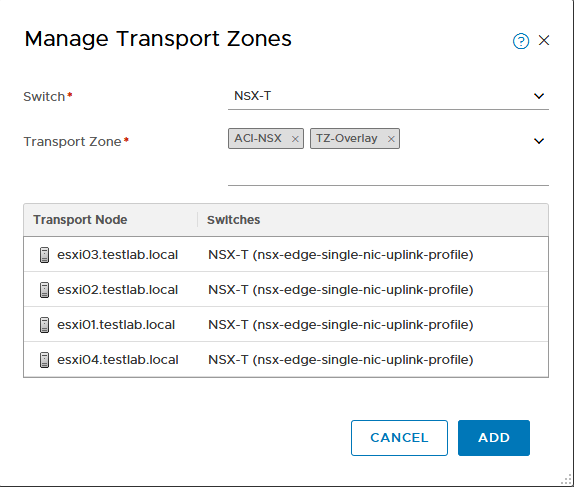

Here you need to select the right Transport Zones.

Be sure that the transport zone is attached to the right virtual switch (managed by NSX-T).

Using the integration

Now that the integration has been built, it should be possible to push EPGs to the virtual switch managed by NSX-T. This is done the same way you are used to with the regular VMware integration: make the EPG a member of the right VMM domain and the EPG should appear in the virtual switch on the ESX host.
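
For those who automate ACI, a domain association is normally an `fvRsDomAtt` object posted under the EPG. The sketch below builds that payload. The tenant, application profile and EPG names are invented, and the domain DN is only a placeholder: I have not verified what the DN of a VMware SDN domain looks like, so copy it from your own APIC rather than trusting the value shown here.

```python
import json


def epg_domain_attachment(tenant: str, app_profile: str, epg: str, domain_dn: str):
    """Return the REST path and payload that attach an EPG to a domain."""
    path = f"/api/mo/uni/tn-{tenant}/ap-{app_profile}/epg-{epg}.json"
    payload = {"fvRsDomAtt": {"attributes": {"tDn": domain_dn}}}
    return path, payload


# All names below are hypothetical; the domain DN in particular is a placeholder.
path, payload = epg_domain_attachment(
    tenant="Lab",
    app_profile="Web",
    epg="Frontend",
    domain_dn="uni/vmmp-VMware/dom-ACI-NSX",
)
print(path)
print(json.dumps(payload, indent=2))
```

The payload would be sent as a POST to the APIC with an authenticated session, exactly like any other object you create through the REST API.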

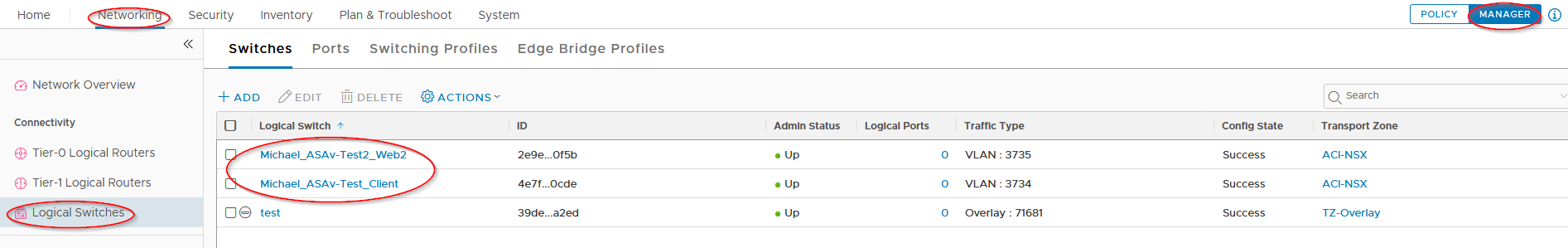

The EPG will be created as a layer 2 segment within NSX-T. However, when logging in to NSX and navigating to “Networking, Segments” you will not see the segments as created by ACI. For some reason (I really don’t get this) NSX-T has two GUI modes: Policy mode and Manager mode. Each mode shows slightly different information. When you change to Manager mode, the “Segments” menu item disappears and is replaced with “Logical switches”. This is where the EPGs can be found.

Within vCenter you can now assign VMs to these port groups.
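
The Policy and Manager split mentioned above also exists in the NSX-T REST API: segments live under the Policy API and logical switches under the Manager API. Assuming the API mirrors what the GUI shows, the ACI-created objects should appear in the Manager listing. The sketch below builds both URLs and pulls the names out of a response; the hostname and the sample switch names are invented.

```python
def listing_urls(nsx_manager: str) -> dict:
    """Return the Policy and Manager API URLs for listing L2 networks."""
    base = f"https://{nsx_manager}"
    return {
        "policy": f"{base}/policy/api/v1/infra/segments",
        "manager": f"{base}/api/v1/logical-switches",
    }


def display_names(response: dict) -> list:
    """Return the sorted display names from either listing response."""
    return sorted(item["display_name"] for item in response.get("results", []))


urls = listing_urls("nsx01.lab.example")
print(urls["manager"])

# Hand-written sample; real names depend on your tenant, EPG and delimiter.
sample = {"results": [{"display_name": "switch-b"}, {"display_name": "switch-a"}]}
print(display_names(sample))
```

Comparing the two listings is a quick way to confirm which mode an object was created in when it seems to be missing from the GUI.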

Notes

A few things I ran into during configuration are worth noting. Documentation of this feature is still very limited. There is just a single page about it on Cisco’s website, and when I was building this there weren’t any blogs out there (that I could find) describing this integration. The integration is also difficult to troubleshoot: with so little documentation available, it’s hard to figure out what is wrong.

For example, my first attempt failed on (probably) account issues between ACI and NSX. Unfortunately I could not find anything about this. There is also no information on which rights are required within NSX. For now we’re just using an administrator account with all rights within NSX, which is not what you want in a production environment. Furthermore, the documentation does not list what is required on the NSX side of the configuration. This might be reasonable, since the documentation was written by Cisco, but more information, or at least a list of requirements, would be nice.

Lastly, the current documentation does not list any troubleshooting steps. You have to figure those out yourself.

References

ACI and NSX-T integration is one of the new features of ACI 5.1. If you want to learn more about the new features in ACI 5.1 please read my post: ACI 5.1 New Features
