ACI Release 4.2 New Features

About a month ago Cisco released ACI version 4.2. Currently we’re at 4.2(2) and it contains a lot of new features. Most of these features are geared towards the ACI anywhere concept.

Since I didn’t cover the new features of ACI 4.1 (I was very busy studying for my CCIE at the time), I will include some of the features introduced in 4.1 too. I’ll let you know whether each one is a 4.1 or a 4.2 feature.

eBGP Multipath

I’ll even start with a 3.2 feature that was introduced in 3.2(7), namely eBGP multipath relax. This feature is (of course) only relevant if you use eBGP for your L3outs. eBGP multipath relax is a hidden feature in regular IOS, but now it’s configurable in ACI. Normally when using eBGP the routing process only allows multipath if the paths originate from the same AS. eBGP multipath relax allows you to use multiple BGP paths even if the ASes supplying the paths are different. This means that ACI can multipath between two equal-cost paths learned from different ASes.
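To make the difference concrete, here is a minimal Python sketch of the multipath eligibility check. It is a toy model, not how ACI or any BGP implementation is actually coded. The `Path` class and `multipath_set` function are hypothetical names, and I’m assuming all other attributes (local preference, MED, origin and so on) are already equal. The default check is modeled as "identical AS path" and the relaxed check as "equal AS path length", which is how the behavior is commonly described.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Path:
    """Hypothetical, stripped-down BGP path: only next hop and AS path."""
    next_hop: str
    as_path: Tuple[int, ...]


def multipath_set(paths: List[Path], relax: bool) -> List[Path]:
    """Return the paths that may be installed together for ECMP.

    Assumption: every other BGP attribute is already equal, so only
    the AS path decides eligibility.
    """
    if not paths:
        return []
    # Simplified best path selection: shortest AS path, first one wins ties.
    best = min(paths, key=lambda p: len(p.as_path))
    if relax:
        # Multipath relax: the AS path length must match, the contents may differ.
        return [p for p in paths if len(p.as_path) == len(best.as_path)]
    # Default (modeled): the AS path must be identical to the best path's.
    return [p for p in paths if p.as_path == best.as_path]


paths = [
    Path("10.0.0.1", (65001, 65100)),
    Path("10.0.0.2", (65002, 65100)),         # different AS, same length
    Path("10.0.0.3", (65001, 65010, 65100)),  # longer AS path
]
strict = multipath_set(paths, relax=False)
relaxed = multipath_set(paths, relax=True)
```

With these example paths, `strict` only holds the first path, while `relaxed` holds the first two. The third path is never used because its AS path is longer.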

Remote physical leaf optimizations

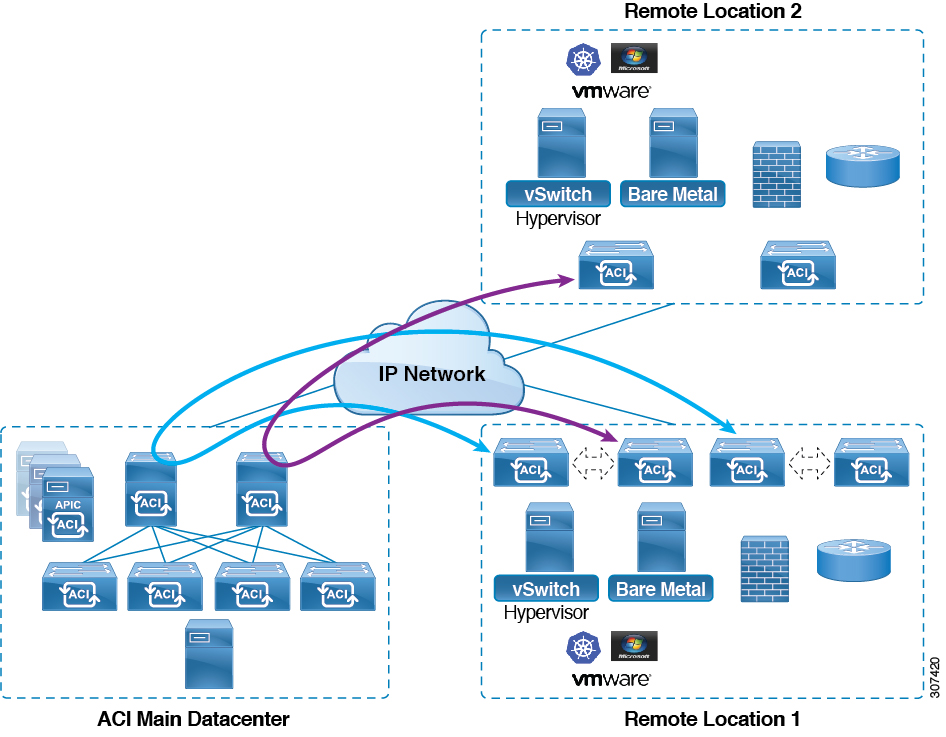

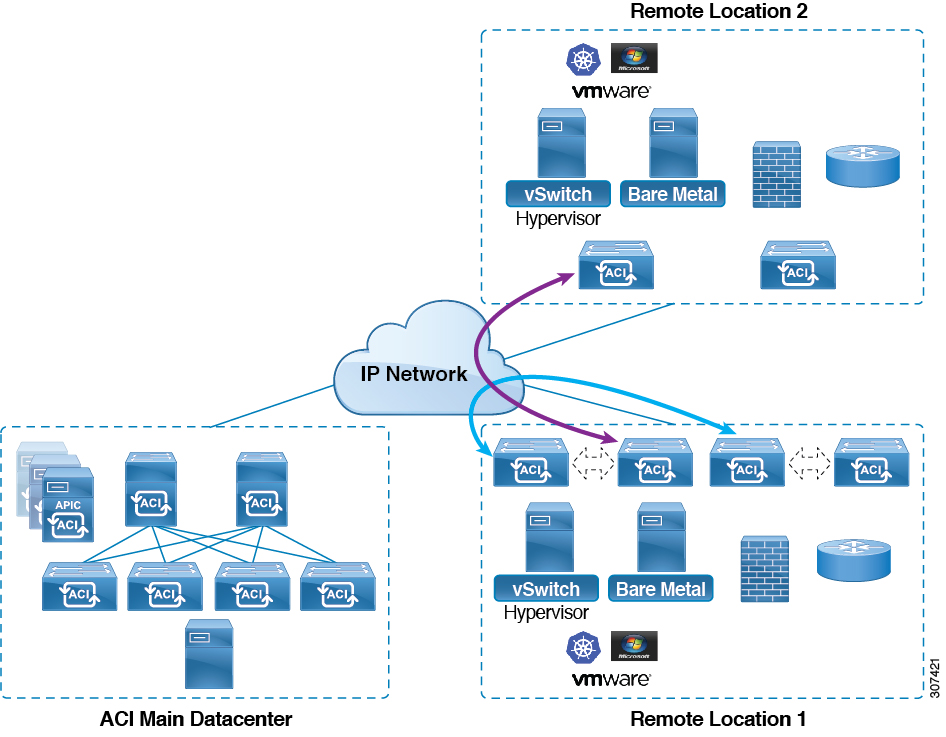

A few versions ago (3.1 or 3.2) ACI introduced the concept of remote physical leaf switches. These switches were part of an ACI pod, but were located at a remote site. The main advantage of this construct was that you didn’t need any spines at the remote site. This made it possible to connect small satellite sites in a fairly cheap way. The downside of this setup was that when two remote leaf sites needed to communicate with each other, the traffic had to traverse the spine in the home site. This created a hairpin traffic pattern, as shown in the image. This was even the case when two remote leaf pairs (they are always added in pairs) were located in the same site.

Since 4.1(2) it’s possible to skip the spine in the traffic flow and forward the traffic directly within the IPN. This makes for a much more efficient traffic flow.
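As a rough illustration of the two traffic patterns, the toy function below lists the hops between two remote leaf pairs with and without direct forwarding. The function name, the `direct_forwarding` parameter and the hop labels are all made up for this sketch. It only mirrors the description above and says nothing about how the fabric actually encapsulates or forwards the traffic.

```python
from typing import List


def remote_leaf_path(src: str, dst: str, direct_forwarding: bool) -> List[str]:
    """Return the (simplified) hop list between two remote leaf pairs."""
    if direct_forwarding:
        # Newer behavior: traffic stays within the IPN.
        return [src, "IPN", dst]
    # Older behavior: traffic hairpins through the spine in the home site.
    return [src, "IPN", "home-site spine", "IPN", dst]


hairpin = remote_leaf_path("rleaf-pair-1", "rleaf-pair-2", direct_forwarding=False)
direct = remote_leaf_path("rleaf-pair-1", "rleaf-pair-2", direct_forwarding=True)
```

The hairpinned path crosses the IPN twice, which is exactly what hurts when both remote leaf pairs sit in the same remote site.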

Another new feature since 4.1(2) is that remote physical leafs can be added in multi-site topologies where the remote leafs are connected to the different sites. 4.1(2) also increased the number of supported remote leaf pairs from 32 to 64.

4.2 in itself doesn’t have many remote leaf optimizations. It does add support for the new switches, and some features are listed on the roadmap. However, I haven’t seen those in public documents so far, so I’m assuming they are under NDA.

Multi-Site optimizations

ACI 4.x is all about ACI Anywhere, and multi-site is definitely a part of that concept. So it’s no surprise to see a lot of improvements covering multi-site. When ACI 4.0 was introduced it contained the option to create a mini fabric with virtual APICs (vAPIC). That fabric was limited at the time to a single site. Since version 4.1 you can use mini ACI fabrics to create a multi-site topology. This is ideal for lab environments like my own.

ACI 4.1 also introduced L1/L2 PBR service graphs in a multi-site environment, as well as support for Preferred Groups. (I don’t use those; I’d rather use allow ip any any contracts if needed, but I guess they might help you remove some contracts in large-scale fabrics.)

The biggest multi-site addition in 4.1 was support for AWS as a remote site. You could use the MSO to create a new site in AWS, which appeared for all intents and purposes as a normal ACI site in your MSO. ACI 4.2 extends this to Azure as well. So now you can use both AWS and Azure as remote sites, but the biggest news here is Cloud Only ACI: you can deploy a site in Azure and AWS without an on-prem ACI environment. You do still need the MSO to be on-prem, but it can be expected that this too will one day move into the cloud.

On top of that, Cisco is adding a lot of additional features to the cloud integrations and optimizing existing ones. Too many to go into, to be honest.

Another important update to the multi-site part is the added support for intersite L3outs. Up to version 4.2 you had to have a local L3out on each site in a multi-site setup. This requirement has been dropped, which means that if your local L3out fails you can use the L3out in another site. Another possibility would be an active/passive firewall cluster stretched across sites.
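The failover idea can be sketched in a few lines of Python. This is only a conceptual model of the behavior described above: `pick_l3out`, the `intersite` flag and the site names are hypothetical, and the real fabric of course does this through routing rather than a lookup function.

```python
from typing import Dict, Optional


def pick_l3out(local_site: str, l3out_up: Dict[str, bool], intersite: bool) -> Optional[str]:
    """Return the site whose L3out should be used, or None if there is none.

    l3out_up maps a site name to whether its L3out is currently usable.
    """
    # Always prefer the local L3out when it is available.
    if l3out_up.get(local_site, False):
        return local_site
    # Without intersite L3out support there is no fallback.
    if not intersite:
        return None
    # Otherwise fall back to the first remote site with a working L3out.
    for site in sorted(l3out_up):
        if site != local_site and l3out_up[site]:
            return site
    return None


state = {"site1": False, "site2": True}
before = pick_l3out("site1", state, intersite=False)
after = pick_l3out("site1", state, intersite=True)
```

With the local L3out in site1 down, `before` is `None` (no external connectivity) while `after` is `"site2"`.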

Floating SVI L3out

The floating SVI L3out is for the specific use case in which you have virtual routers in VMware (on a VDS). In versions prior to 4.2 you had to configure the SVI on all leaf switches that could possibly be connected to the virtual router. This required a lot of configuration and could even cause outages when ESXi clusters were added to leaf switches without the specific configuration. This was because the SVI was bound to physical interfaces. The floating SVI removes this relationship, making a free-roaming L3out a possibility. Use cases that come to mind are virtualized firewalls or NSX edge gateways. More information about this feature can be found here

Services Engine

You will probably have heard about the APIC-X. This new function for an APIC has already been deprecated and needs to be replaced with a Services Engine. This is because the APIC itself was not powerful enough to run all the applications it was supposed to. (Looking at you, NIR and NAE!) Several deployment options will be available for the Services Engine, including a virtual instance. That one, however, will primarily be targeted at lab and demo environments. For actual production environments you’ll need the physical clusters.

Other new features

- PIMv6

- BGP shutdown and soft-reset

- L4-L7 support in vPOD

- Local L3out in vPOD

- Viptela integration

- Several new switches