ACI Release 5.0 New Features

Yesterday, on the 14th of May 2020, Cisco released ACI 5.0, the fifth major release of ACI. This post explores some of the major new features in this version. I’m especially excited about the possibility to create true physical multi-tenancy and about Endpoint Security Groups (ESGs).

But there’s more to be found. Let’s start.

Hardware and Scale

ACI 5.0 is the first version of ACI ever to drop support for existing hardware. If you have Gen 1 hardware in your ACI environment, it’s likely not supported anymore. Check the end-of-support notices for your hardware to be sure. In preparation for the release of ACI 5.0, I asked for and received budget to replace our existing lab environment, as it was largely based on Gen 1 hardware. So I know for sure that the Nexus 9336PQ (aka Baby Spine) and the Nexus 9372 leaf switches are not supported anymore. (Installing 5.0 on them might work, but is unsupported.)

ACI 5.0 now supports the Nexus 9736C-FX and 9736Q-FX modules with a 9508-FM-G fabric module. As far as I know there’s no other new hardware support.

As for scale, the most important change in my opinion is the increase to 500 supported leaf switches in a multi-pod environment. (This requires a 7-node APIC cluster.)

True Physical Multi-Tenancy

ACI has supported multi-tenancy since its inception; it was designed with multi-tenancy in mind. Many companies that use ACI define tenants along different lines. You might have separate Development, QA and Production tenants. This is something I see a lot, but it isn’t the original thought behind tenants. You can easily have this separation within a single tenant, and there are cases where this would be recommended.

The multi-tenancy model was geared more towards different management domains. A customer of mine has multiple tenants for this purpose: the regular IT department manages the ‘regular’ tenant and a different department manages its own tenant. The company I work for is a big international company, and in some countries we also offer ACI Labs as a service, in which a customer gets its own tenant in which they can do several lab-related things.

One of the biggest downsides of this model was that delegation only covered the tenant policy model. A tenant could manage everything in their own ACI tenant that was part of the tenant policy model. Physical ports, however, are not part of the tenant policy model. This meant that, as a service provider, you had two choices:

- Give the tenant access to the physical switches, potentially harming other tenants.

- Manage the physical switches centrally and perform a change every time a customer requested one.

Both these choices are less than ideal, but as of ACI 5.0 you can use advanced RBAC to assign switches to a tenant. (The documentation refers to whole switches, but in sessions with Cisco I heard it might be possible to do this at port level too.) This enables the tenant to manage the ports it has access to without impacting other tenants. Customers can now connect their own physical servers to the fabric without needing help from the overall administrators.

Endpoint Security Groups

Do you finally grasp the EPG concept? Yes? Great. Now there’s something new. Endpoint Security Groups, or ESGs, are an abstraction over EPGs to which contracts can be applied. So wait, now I have to apply contracts between ESGs instead of EPGs? As always, the answer is: it depends. The introduction of ESGs enables you to choose when to use which.

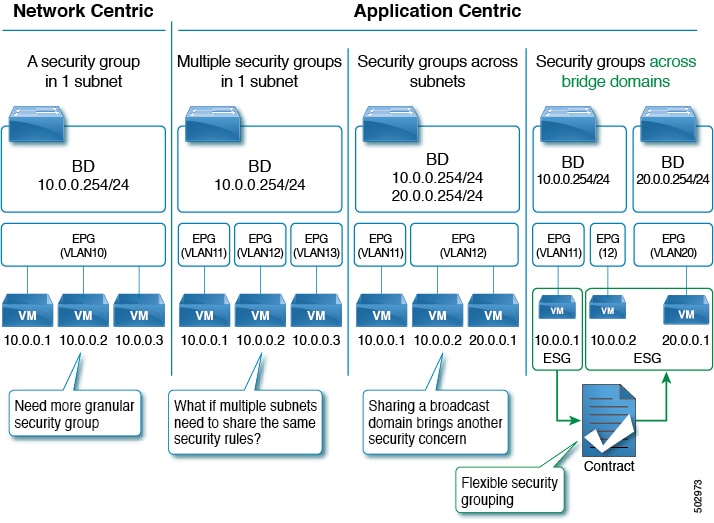

So what’s this ESG concept? Version 5 of the APIC security Configuration Guide explains this for us. It also includes a nice image that shows the ESG concept in action:

Before ACI 5.0 you had to define your contracts between EPGs. This is fine when all systems to which you want to apply the contract reside in the same EPG. When creating a greenfield datacenter this is probably always the case, but unfortunately we live in a world full of brownfields.

Let’s create an example. Say you are a big university and you have a lot of applications. Back in the day you just put the servers for these applications in a VLAN, say VLAN 10 - Servers. After a while this VLAN (or more specifically its IP space) is full. So, here comes VLAN 11 - Servers_2. You just go on building stuff like this, and before you know it you have application servers spanning multiple VLANs.

Enter ACI. You migrate the existing network as-is into ACI, so per VLAN you create a bridge domain and an EPG. Between these EPGs you allow all traffic. Problem solved, right? But now the security department wants you to implement segmentation between the applications in these EPGs (or VLANs, as the security department will likely still call them). Now you’ve got a problem. Will you move all these specific applications into their own EPGs? That might be possible if you can renumber the IP addresses on the servers, but renumbering is often difficult and sometimes even impossible. So you might get stuck with application servers in several EPGs, distributed over several bridge domains. All of them need to talk to each other and to the systems that provide services to or consume services from those servers.

In previous versions of ACI you could use things like preferred groups to make this a bit easier, but the introduction of ESGs enables you to group the endpoints in these EPGs into a clear superstructure to which contracts can be applied.

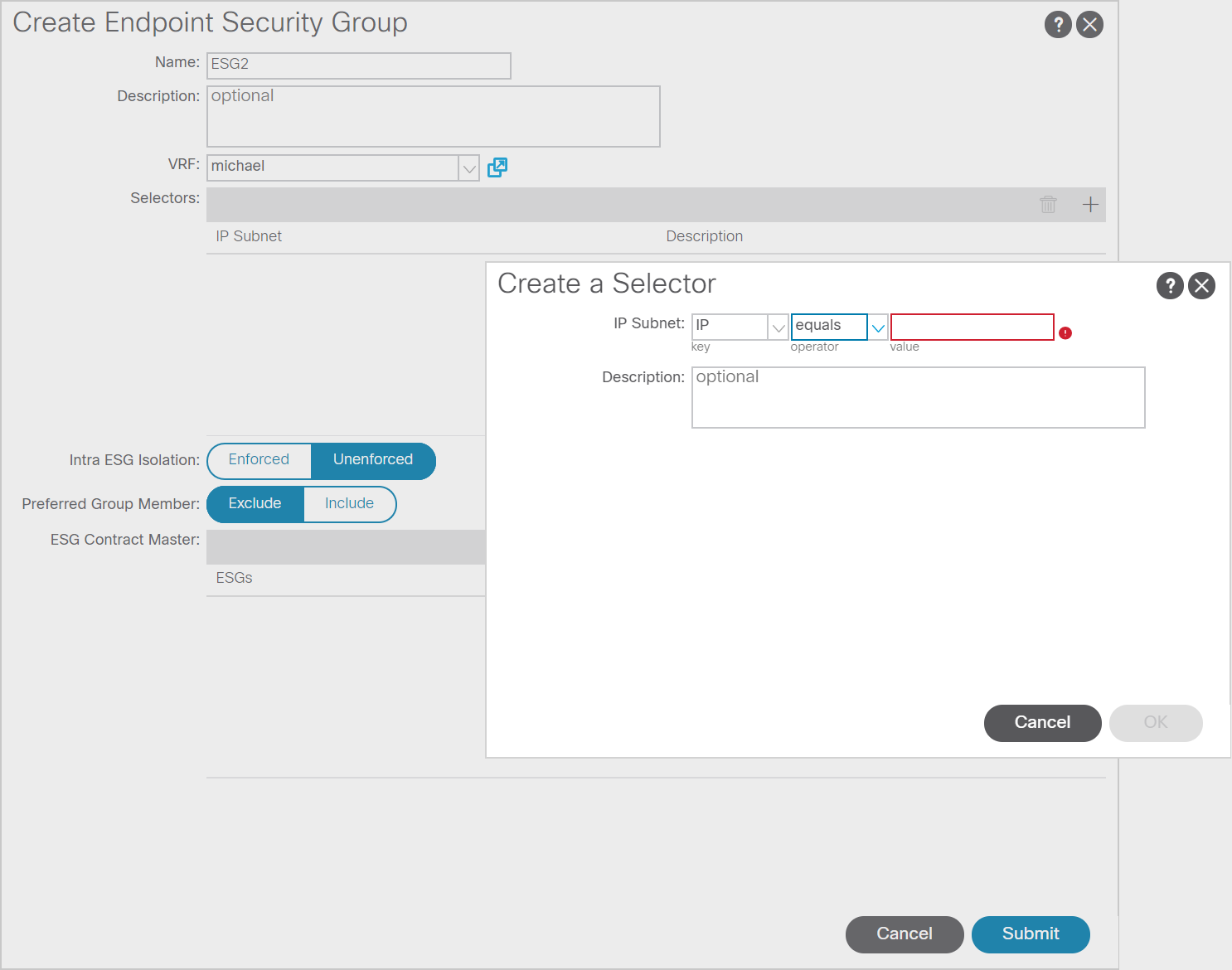

In the image below you can see the screen to create an ESG. As you can see you need to define a name, a VRF and a selector. The selector (at least for now) is IP based. So you need to define the IP address(es) of the various hosts that will be part of the ESG. I will look into this further in the near future, but it looks great so far. (Even better would be if we could use more selectors than just IP, but that might be in a next release.)
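To make the IP-based selector idea a bit more concrete, here is a minimal Python sketch of how endpoints from different VLANs could end up in the same ESG based purely on their IP address. It is a conceptual model only, not APIC configuration or the APIC API: the ESG names, the addresses and the `classify` helper are all made up for illustration, and the first-match behaviour is an assumption of this sketch.

```python
from ipaddress import ip_address, ip_network

# Hypothetical ESGs for the university example: name -> list of IP selectors.
# The selectors deliberately span both "VLAN 10" (10.0.10.0/24) and
# "VLAN 11" (10.0.11.0/24), because the applications span both.
ESG_SELECTORS = {
    "esg-student-portal": ["10.0.10.21/32", "10.0.11.45/32"],
    "esg-library": ["10.0.10.30/32", "10.0.11.0/28"],
}


def classify(endpoint_ip, selectors=ESG_SELECTORS):
    """Return the first ESG whose selectors contain endpoint_ip, or None.

    First match wins here; that is a simplification made for this sketch.
    """
    addr = ip_address(endpoint_ip)
    for esg_name, entries in selectors.items():
        if any(addr in ip_network(entry) for entry in entries):
            return esg_name
    return None


# Two servers in different VLANs land in the same ESG.
print(classify("10.0.10.21"))  # esg-student-portal
print(classify("10.0.11.45"))  # esg-student-portal
# A server that matches no selector is not part of any ESG.
print(classify("10.0.12.1"))   # None
```

The point of the sketch is that group membership follows the selector rather than the VLAN or bridge domain the server happens to live in, which is what makes contracts between applications possible without renumbering.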

Update: I tested the ESGs in my lab. You can find my write-up here: Endpoint Security Groups Explored

Service graph improvements

There are several improvements to the service graphs. As of version 5.0 it is possible to implement symmetric PBR for L1/L2 nodes (this was already possible for L3 nodes). Symmetric PBR means that both directions of a flow will always be sent to the same device.
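The idea of always sending a flow to the same device can be illustrated with a small Python sketch. This is not how the switches implement it (the real hashing happens in hardware and is not covered in this post); it only shows one common way to get a direction-independent choice, namely hashing a key in which the two addresses are sorted first. The node names and the `pick_node` function are hypothetical.

```python
import hashlib

# Hypothetical pool of service devices (for example firewalls) behind one PBR policy.
SERVICE_NODES = ["fw-1", "fw-2", "fw-3"]


def pick_node(src_ip, dst_ip, protocol, nodes=SERVICE_NODES):
    """Pick a service node so that A->B and B->A map to the same node."""
    # Sorting the two addresses makes the key independent of direction.
    low, high = sorted([src_ip, dst_ip])
    key = f"{low}|{high}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 4 bytes of the digest as an index into the node pool.
    return nodes[int.from_bytes(digest[:4], "big") % len(nodes)]


forward = pick_node("10.0.10.21", "192.168.1.5", "tcp")
reverse = pick_node("192.168.1.5", "10.0.10.21", "tcp")
print(forward == reverse)  # True
```

Because the request and the reply hit the same device, a stateful service node such as a firewall sees both halves of the conversation.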

You can now also rewrite the source MAC address of redirected traffic. This means that the service device will see the MAC address of the bridge domain used to connect to the service device instead of the original source MAC address of the frame. This prevents the service device from learning the original MAC address on a specific port.

Another improvement is that you can now use unidirectional PBR when one of the EPGs is an L3out.

Segment Routing

Unfortunately I don’t know nearly enough about service provider technologies, but ACI 5.x will have some service provider improvements. The first (of probably many) is segment routing MPLS handoff. Before ACI 5.0 you had to create a VRF-lite route peering for each VRF you wanted to connect to an MPLS network. As of ACI 5.0 you can use Segment Routing for this.

Other improvements

- BFD improvements: ACI 5.0 supports multi-hop BFD and C-bit aware BFD.

- FEC improvements: New FEC modes have been added to ACI and FEC autonegotiation is now available (which would have been a lifesaver in several TAC cases I have had to date).

- Duo two-factor authentication: Duo Security is now supported as a 2FA provider for logging in to the APIC.

- Floating L3outs for physical domains: The floating SVI L3out configuration used to depend on VMM integration. This requirement has been removed, so you can now use floating SVI L3outs on physical domains too.